Rumor mill: It’s an exciting time for PC fans. Not only is Nvidia set to release its consumer Ampere cards within the next few months, but AMD is also launching the RDNA 2 ‘Big Navi’ products. There had been talk of Team Red’s flagship being an “Nvidia Killer” that offered 50 percent more performance than the RTX 2080 Ti, but it seems the card won’t be as monstrous as that claim suggests.

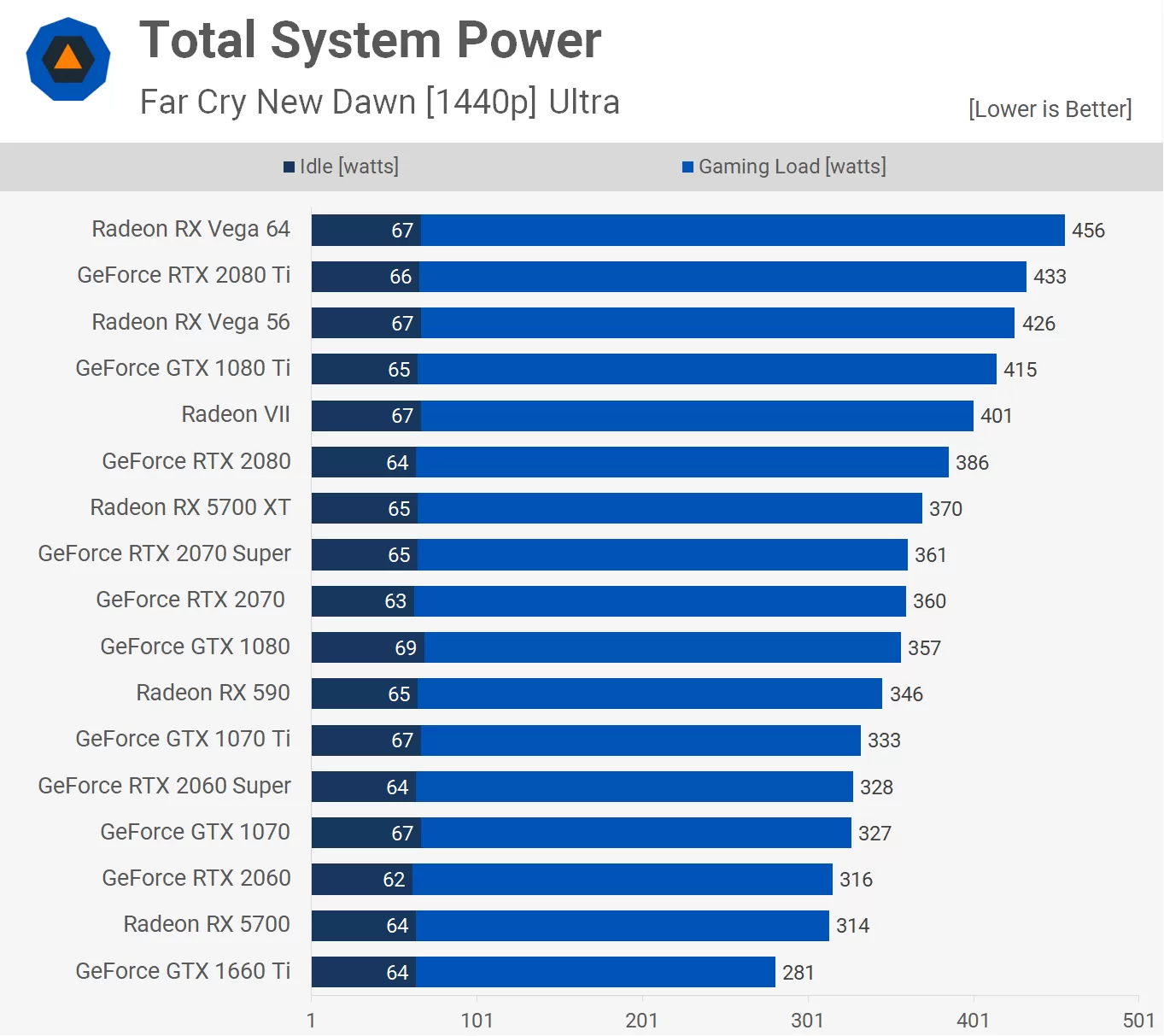

Coreteks, citing sources in Asia, reports that AMD has started sharing details of the RDNA 2-based GPUs with its Add-In Board (AIB) partners. According to those sources, the high-end card, codenamed Sienna Cichlid, is just 15 percent faster than Nvidia's RTX 2080 Ti, and that’s only in select AMD “optimized” games.

It was expected that Big Navi would be a direct competitor to Nvidia’s top-end RTX 3080 Ti, but it seems that the RTX 3080 is a more realistic target. While that might disappoint AMD fans, there is some good news: the company is reportedly going to undercut the RTX 3080, offering gamers what will still be excellent performance for a lower price.

The publication writes that there will be two Big Navi-based cards at launch: the high-end product and a lower-end alternative, much like the Radeon RX 5700 XT and RX 5700. Both will use GDDR6 memory. There’s also a mid-range product, codenamed Navy Flounder, that looks set to launch early in 2021.

We won't have to wait long to find out how accurate the report is. Big Navi will reportedly be unveiled in early September, with the cards launching on October 7. Nvidia, meanwhile, is rumored to be releasing its Ampere GPUs on September 17.

https://www.techspot.com/news/86205-no-nvidia-killer-big-navi-best-may-match.html