As artificial intelligence becomes increasingly advanced, we're hearing more warnings about its potential dangers. Elon Musk thinks it will be the cause of World War 3, but a recent study from Stanford University suggests these systems could pose a more immediate threat to people's privacy.

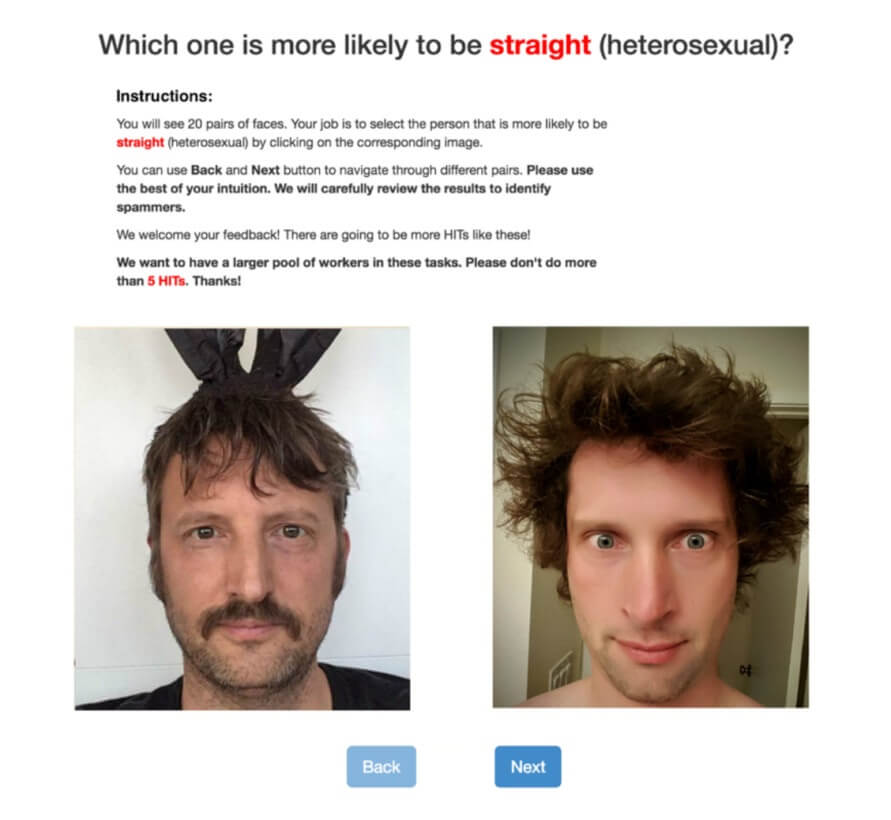

Michal Kosinski and Yilun Wang's algorithm can accurately guess if a person is straight or gay based on a photo of their face. This was achieved using a sample of over 35,000 images that had been publicly posted on a US dating site, with an even representation of gay and straight (determined through their profiles) and male and female subjects.

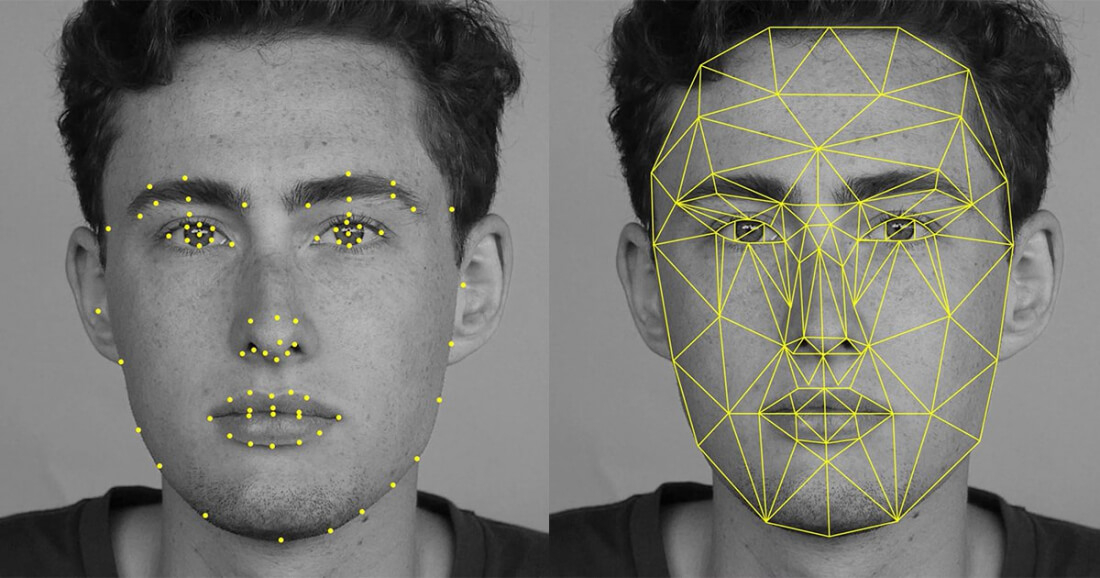

The deep neural networks extracted features from the huge dataset, identifying certain trends that helped the system determine a person's sexual orientation. Gay men tended to have "gender-atypical" features, expressions and grooming styles, meaning they appeared more feminine. They often had narrower jaws, longer noses and larger foreheads than straight men. The opposite was true for gay women, who generally had larger jaws and smaller foreheads than straight women.

The system was eventually able to distinguish between gay and straight men 81 percent of the time, and between gay and straight women 74 percent of the time, just by reviewing a single photo. When it was shown five images of the same person, accuracy rose to 91 percent for men and 83 percent for women. By contrast, human judges identified sexual orientation correctly only 61 percent of the time for men and 54 percent for women.

According to the researchers, the findings strongly support the theory that the same hormones affecting a fetus's developing bone structure play a part in determining sexuality, meaning that people are born gay and that it's not a choice. Additionally, the lower success rate for women could suggest that female sexual orientation is more fluid.

What's concerning is the AI's potential for misuse, especially against people who want to keep their sexual orientation private from friends and family. Google results show that the phrase "is my husband gay?" is more common than "is my husband having an affair?"

There's also the concern that the software could be used in countries such as Iran, Sudan, Saudi Arabia and Yemen, where homosexuality is punishable by death.

This isn't the first time Kosinski has been linked to controversial research. His work with Cambridge University on psychometric profiling involved using Facebook profiles to create a model of someone's personality. Donald Trump's campaign and Brexit supporters in the UK reportedly used similar tools to target voters and help them achieve victory.