In brief: Several advocacy groups have written an open letter to President Biden and his administration, highlighting their concerns about the lack of action on two fronts: the massive amount of energy used by generative AI tools, and those tools' ability to create environment-related misinformation.

Back in October, the Biden administration attempted to address the difficult issue of regulating artificial intelligence development with an executive order signed by the president that promised to manage the technology's risks. The order covers many areas, including AI's impact on jobs, privacy, security, and more. It also mentions using AI to improve the national grid and to review environmental studies to help combat climate change.

Seventeen groups, including Greenpeace USA, the Tech Oversight Project, Friends of the Earth, Amazon Employees for Climate Justice, and the Electronic Privacy Information Center (EPIC), wrote in an open letter to Biden that the executive order does not do enough to address the technology's environmental impact, both its direct toll on the planet and its potential to spread climate misinformation.

"… due to their enormous energy requirements and the carbon footprint associated with their growth and proliferation, the widespread use of LLMs can increase carbon emissions," the letter states.

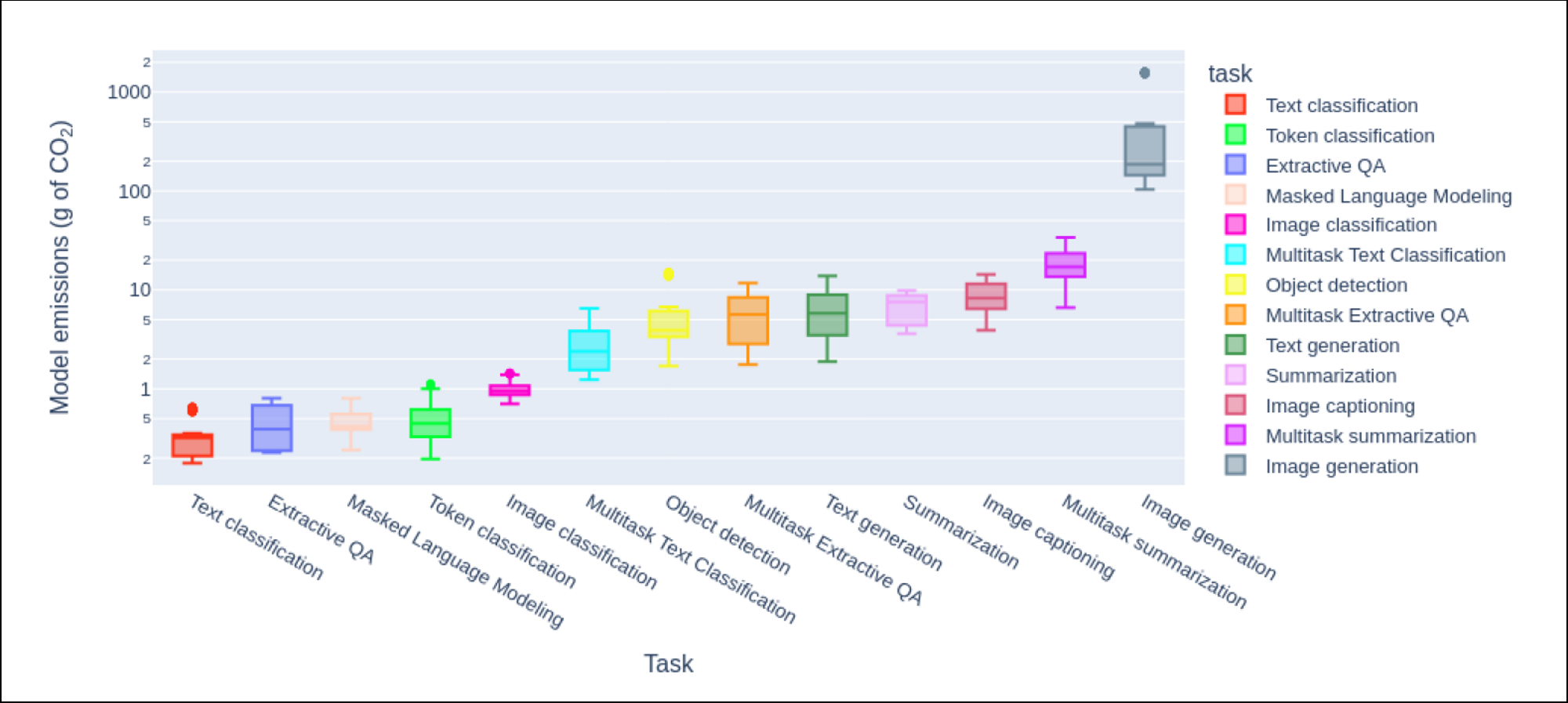

A recently published study from AI startup Hugging Face and Carnegie Mellon University highlighted the massive amount of energy used by generative AI models, both during training and in everyday use. Generating 1,000 images with a model such as Stable Diffusion XL produces roughly the same carbon emissions as driving an average gas-powered car 4.1 miles.

"Unfounded hype from Silicon Valley says that AI can save the planet sometime in the future but research shows the opposite is actually occurring right now," the groups wrote.

Beyond AI's direct impact on the planet, the groups also worry that the technology could be used to spread climate disinformation.

"Generative AI threatens to amplify the types of climate disinformation that have plagued the social media era and slowed efforts to fight climate change," they wrote. "Researchers have been able to easily bypass ChatGPT's safeguards to produce an article from the perspective of a climate change denier that argued global temperatures are actually decreasing."

We've already seen generative AI used to create disinformation about the upcoming US election and in several other areas. In these cases, the main challenge is often convincing people that the generated content is not real.

The groups want the government to require companies to publicly report the energy use and emissions produced across the full life cycle of AI models, including training, updating, and search queries. They also request a study into the effect AI systems can have on climate disinformation, along with safeguards against its production. Finally, they ask that companies explain to the general public how generative AI models produce information, how the models' accuracy on climate change is measured, and what sources of evidence support the claims they make.