What just happened? At this year's Hot Chips conference in Palo Alto, California, Intel revealed a ton of information about its 2024 Xeon CPUs for data centers. The company also detailed how it is using AI technology to improve the power efficiency of its next-gen desktop processor lineup.

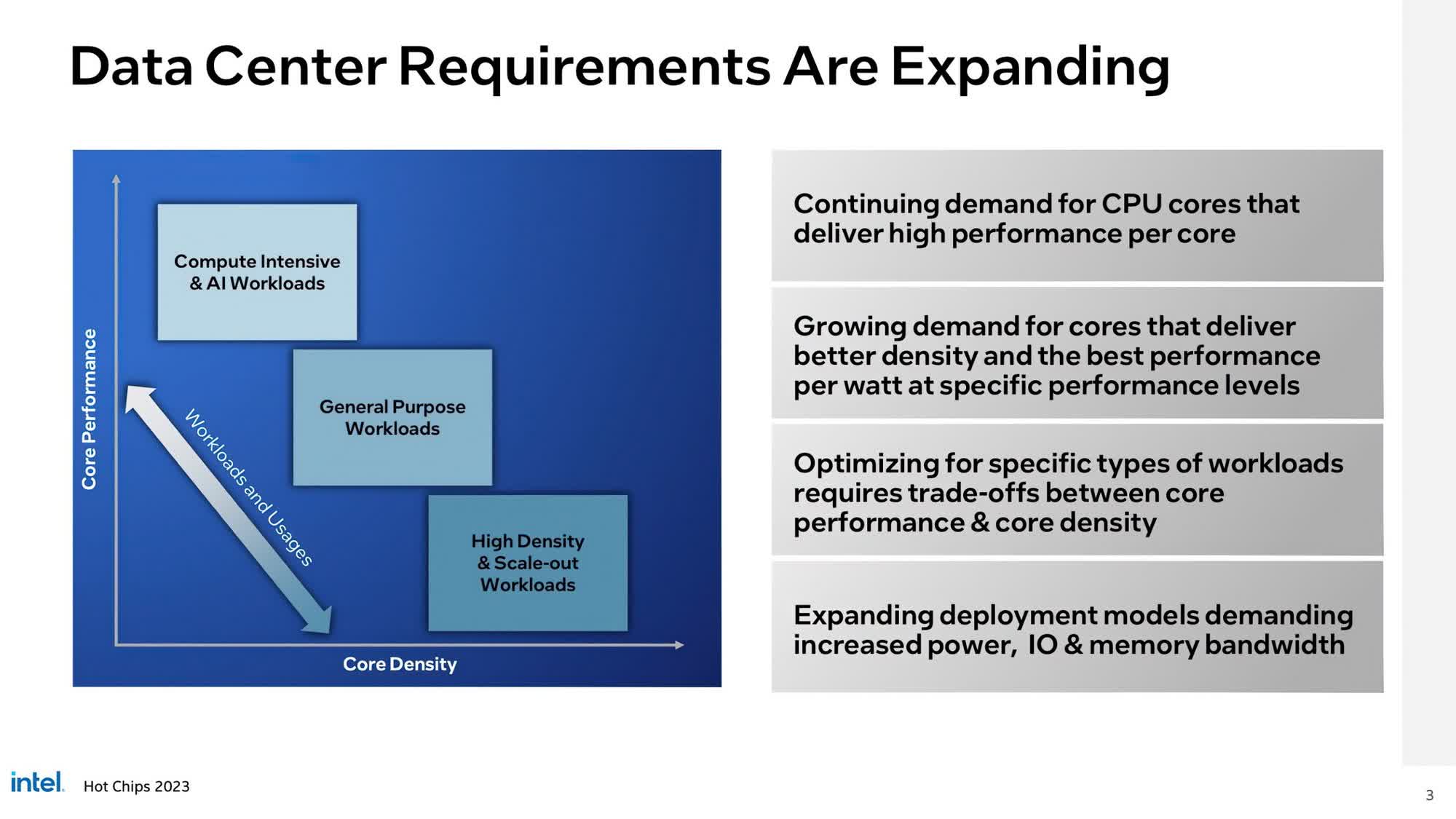

Starting off with the Xeon CPUs, Intel explained that workloads in modern data centers are becoming more specialized with every passing year, requiring different types of chips for different types of jobs. For example, some workloads, such as AI processing, require high-performance cores, while others call for trade-offs between per-core performance and core density.

To address these varying requirements, Intel's 2024 Xeon CPUs will come in two separate lineups, one built on Performance-cores (P-cores) and the other on Efficient-cores (E-cores). The P-core lineup, codenamed Granite Rapids, is being optimized for compute-intensive and AI applications, while the E-core lineup belongs to the Sierra Forest family and targets high-density and scale-out workloads. Both lineups, however, will share the same platform and software stack, meaning they will be compatible with one another.

Intel also announced that the 2024 Xeon platform will use modular SoCs for increased scalability and flexibility. The company believes the new design will meet the performance and power efficiency needs of AI, cloud, and enterprise installations. The platform will also support up to 12 channels of DDR/MCR memory (one or two DIMMs per channel), up to 136 PCIe 5.0 lanes with CXL 2.0, and up to six UPI links.

Intel further claimed that the Granite Rapids lineup will bring higher memory bandwidth, core counts, and cache capacity for compute-intensive workloads, delivering two to three times better performance in mixed AI applications than the Sapphire Rapids family. As for Sierra Forest, Intel claims it will offer 2.5 times better rack density and 2.4 times higher performance per watt than Sapphire Rapids. Both lineups will include several SKUs with varying core counts and TDPs.

Finally, Intel announced that its Meteor Lake chips will use AI to manage power, making them more efficient than their predecessors. As reported by PC World, the chips will include a VPU (vision processing unit) that will not only accelerate AI workloads but also help improve laptop battery life. According to the company, the AI in the new chips will be able to decide when to shift between high-power (performance) and low-power (idle) states as part of a new feature that the company is calling 'Dynamic Voltage and Frequency Scaling,' or DVFS.