Following the discovery that foreign parties used Facebook to spread misinformation during the 2016 US presidential election, the platform promised to eradicate the issue over time.

It seems the company's hard work in this regard is finally beginning to bear fruit. In a blog post published today, Facebook product manager Antonia Woodford discussed some of the major changes the company has made to reduce the spread of "false news."

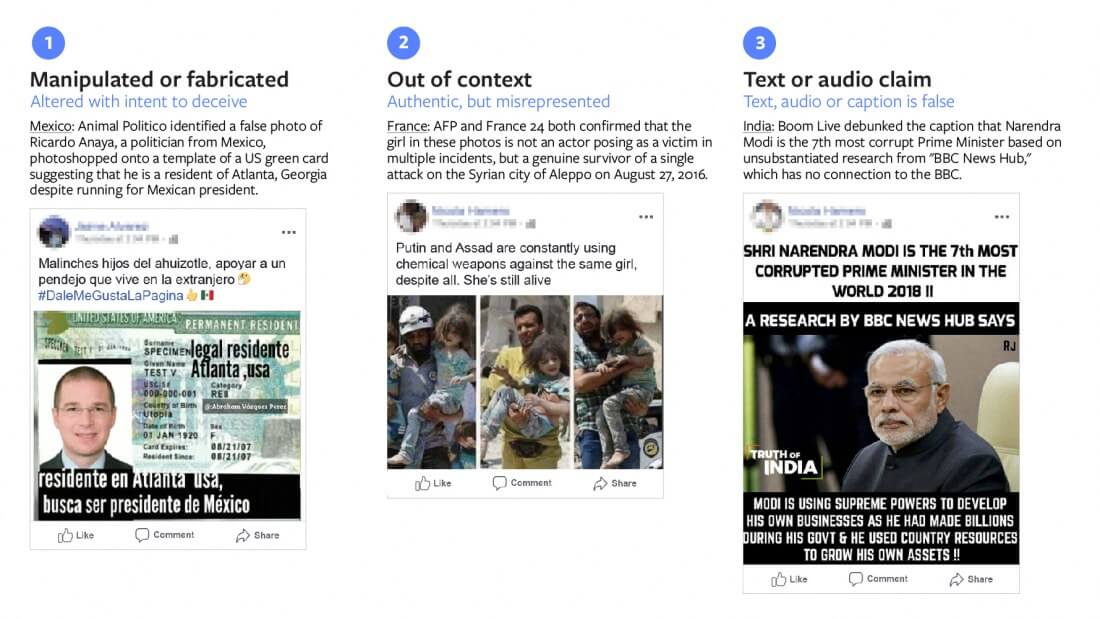

Facebook has already taken the first steps towards accomplishing its goal by partnering with several third-party fact-checkers. These individuals review and rate the accuracy of various pieces of content, including captioned images and videos.

Now, Facebook is aiming to speed up the process by working behind the scenes on "new technology" that uses machine learning to identify "potentially false" content automatically.

The AI does not make any final decisions itself, though. Instead, it passes flagged content on to one of the aforementioned fact-checkers for review.

To handle the expected increase in workload, Facebook has promised to expand its fact-checking programs for photos and videos to 17 countries around the world, bringing on new partners along the way.

So, how does Facebook's new system work? The company's content-flagging AI uses "engagement signals" (most likely shares, comments, likes, or direct feedback) to find content that could be exaggerated or misleading.

Once content has been flagged, fact-checkers will use "visual verification techniques" (such as reverse image searches) and general research strategies to come to a conclusion about its accuracy.
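
Facebook has not published how its model works, so the following is only a rough sketch of the flag-then-review flow described above. Everything in it is an assumption made for illustration: the `Post` fields, the `suspicion_score` heuristic, and the `threshold` value are hypothetical, and a simple hand-written ratio stands in for the machine learning model. The point it shows is that the automated step only builds a queue, while the verdict stays with human fact-checkers.

```python
from dataclasses import dataclass


@dataclass
class Post:
    # Hypothetical engagement signals; the real inputs are not public.
    post_id: str
    shares: int
    comments: int
    false_news_reports: int  # direct feedback from users


def suspicion_score(post: Post) -> float:
    """Toy stand-in for a trained model: share of interactions that are user reports."""
    interactions = post.shares + post.comments + post.false_news_reports
    if interactions == 0:
        return 0.0
    return post.false_news_reports / interactions


def queue_for_review(posts: list[Post], threshold: float = 0.2) -> list[str]:
    """Return IDs of posts to send to fact-checkers, most suspicious first.

    No post is labeled true or false here; this step only prioritizes.
    """
    flagged = [p for p in posts if suspicion_score(p) >= threshold]
    flagged.sort(key=suspicion_score, reverse=True)
    return [p.post_id for p in flagged]


posts = [
    Post("a", shares=90, comments=10, false_news_reports=0),
    Post("b", shares=40, comments=30, false_news_reports=30),
    Post("c", shares=5, comments=5, false_news_reports=10),
]
print(queue_for_review(posts))  # ['c', 'b']
```

In this toy run, post "a" never reaches a reviewer because nobody reported it, while "c" is reviewed before "b" because a larger share of its interactions are reports.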

Facebook says that the sources fact-checkers use are trustworthy, so they probably won't be relying on Wikipedia for their information.