Why it matters: Deepfakes, in which people's faces are superimposed over the bodies of others using AI and machine learning, can already produce some frighteningly convincing results. Now, researchers are advancing the process even further, thanks to a method that can make the videos appear even more lifelike and transfer "styles."

Researchers from Carnegie Mellon University and Facebook Reality Lab presented the Recycle-GAN system at the European Conference on Computer Vision (ECCV) in Germany. It builds on generative adversarial networks (GANs), a class of AI algorithm that can transfer the style of one image onto another. Recycle-GAN improves on this by lining up the frames from both videos using "not only spatial, but temporal information." It also uses a discriminator that scores the consistency of the new video against the source material.

"This additional information, accounting for changes over time, further constrains the process and produces better results," wrote the researchers.
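To make the idea of a temporal constraint concrete, here is a minimal toy sketch in Python. It is not the researchers' code, and the article does not give their formula; it is one plausible reading of "accounting for changes over time." The names `to_y`, `to_x`, and `predict_next_y` are hypothetical stand-ins for the learned networks, and a "frame" is just a short list of numbers. The sketch translates two consecutive frames into the target style, predicts the next frame there, translates the prediction back, and measures how far it lands from the real next frame. The discriminator is left out.

```python
from typing import Callable, List

Frame = List[float]  # toy "frame": a flat list of pixel values


def mse(a: Frame, b: Frame) -> float:
    """Mean squared error between two frames of equal length."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


def temporal_cycle_loss(
    video_x: List[Frame],
    to_y: Callable[[Frame], Frame],                   # hypothetical X -> Y translator
    to_x: Callable[[Frame], Frame],                   # hypothetical Y -> X translator
    predict_next_y: Callable[[Frame, Frame], Frame],  # hypothetical predictor in Y
) -> float:
    """Average error after a round trip through the other domain *and* time."""
    if len(video_x) < 3:
        raise ValueError("need at least three frames")
    total = 0.0
    steps = 0
    for t in range(1, len(video_x) - 1):
        y_prev = to_y(video_x[t - 1])             # translate the two past frames
        y_curr = to_y(video_x[t])
        y_next = predict_next_y(y_prev, y_curr)   # step forward in time in Y
        x_next = to_x(y_next)                     # translate the prediction back
        total += mse(x_next, video_x[t + 1])      # compare with the real next frame
        steps += 1
    return total / steps


# Toy stand-ins (assumptions for illustration only).
def to_y(frame: Frame) -> Frame:
    return [2.0 * p for p in frame]


def to_x(frame: Frame) -> Frame:
    return [0.5 * p for p in frame]


def extrapolate(prev: Frame, curr: Frame) -> Frame:
    """Linear extrapolation: assume motion continues at the same rate."""
    return [2.0 * c - p for p, c in zip(prev, curr)]


def freeze(prev: Frame, curr: Frame) -> Frame:
    """A predictor that ignores motion and repeats the current frame."""
    return list(curr)


# A five-frame "video" whose pixels change linearly over time.
video_x = [[float(t), float(t) + 1.0] for t in range(5)]

good_loss = temporal_cycle_loss(video_x, to_y, to_x, extrapolate)
bad_loss = temporal_cycle_loss(video_x, to_y, to_x, freeze)
```

In this toy setup the predictor that respects motion scores a lower loss than the one that freezes the frame, which is the sense in which temporal information "further constrains the process": a translation that looks right frame by frame but breaks the flow of time is penalized.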

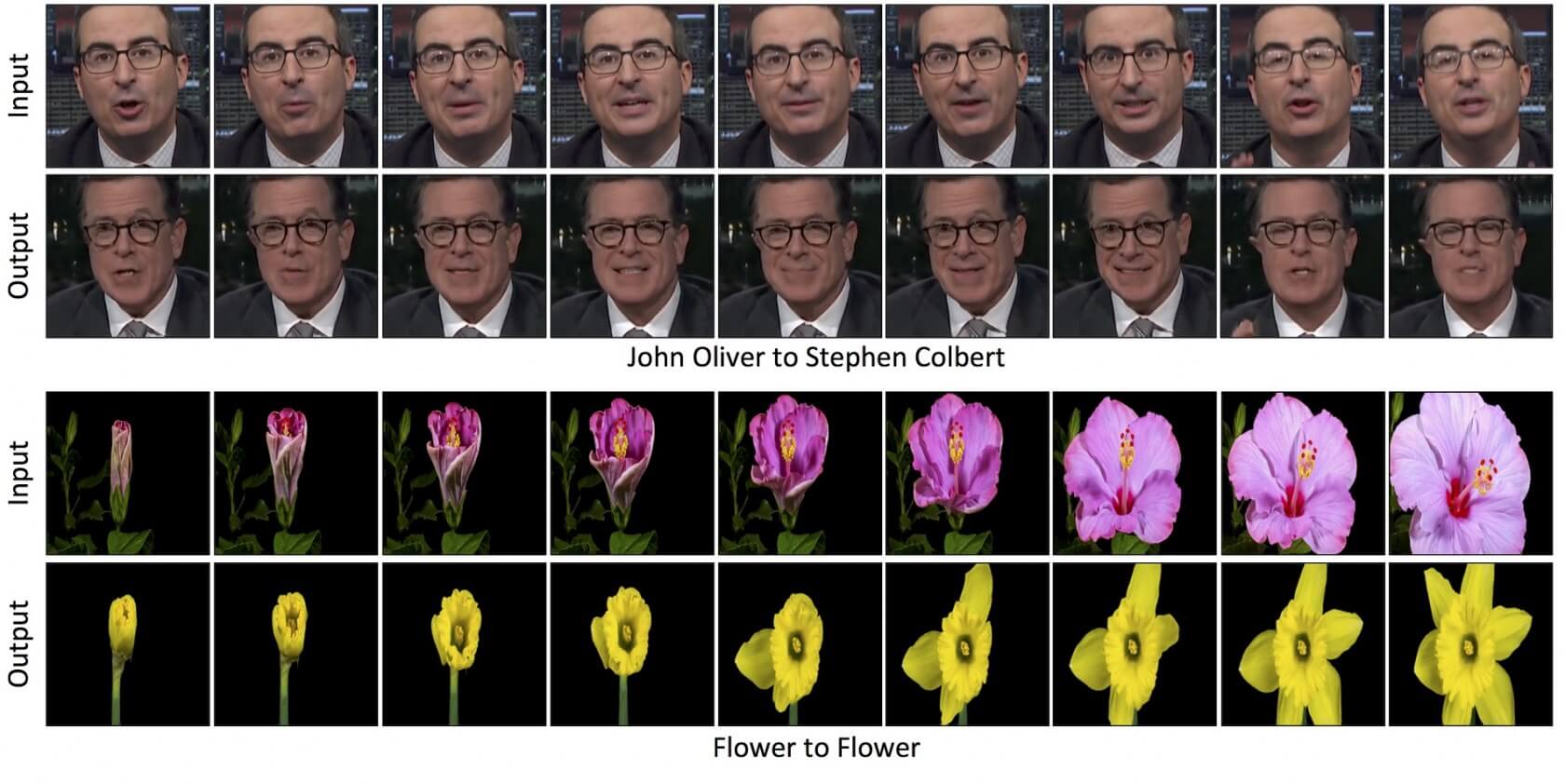

In the YouTube clip, we can see how Stephen Colbert appears to repeat the same lines as John Oliver, with everything from head nods and eyebrow raises to the shape of his mouth being imitated. Another video shows the same process applied to a cartoon frog.

The "unsupervised data-driven approach" can also be used in ways that don't involve faces, including blooming flowers, sunrises, sunsets, clouds, and wind. But it's the transfer of human mannerisms that is most impressive, such as "John Oliver's dimple while smiling, the shape of mouth characteristic of Donald Trump, and the facial mouth lines and smile of Stephen Colbert," write the researchers in a paper.

In addition to being used to spread fake news, deepfakes are commonly used to paste celebrities' faces onto adult movie stars' bodies, creating fake celebrity pornography. Pornhub banned such clips earlier this year after Discord shut down a group that shared the videos.

Last month, the Defense Department produced the first tools for catching deepfake videos.