Why it matters: AI on the battlefield could allow for faster, better-coordinated military strikes and responses. In the future, it might also be used to predict an enemy's strategy far more accurately. But imagine trying to live peacefully in a war-torn country with drones flying overhead. Would you be able to trust computers not to make a mistake?

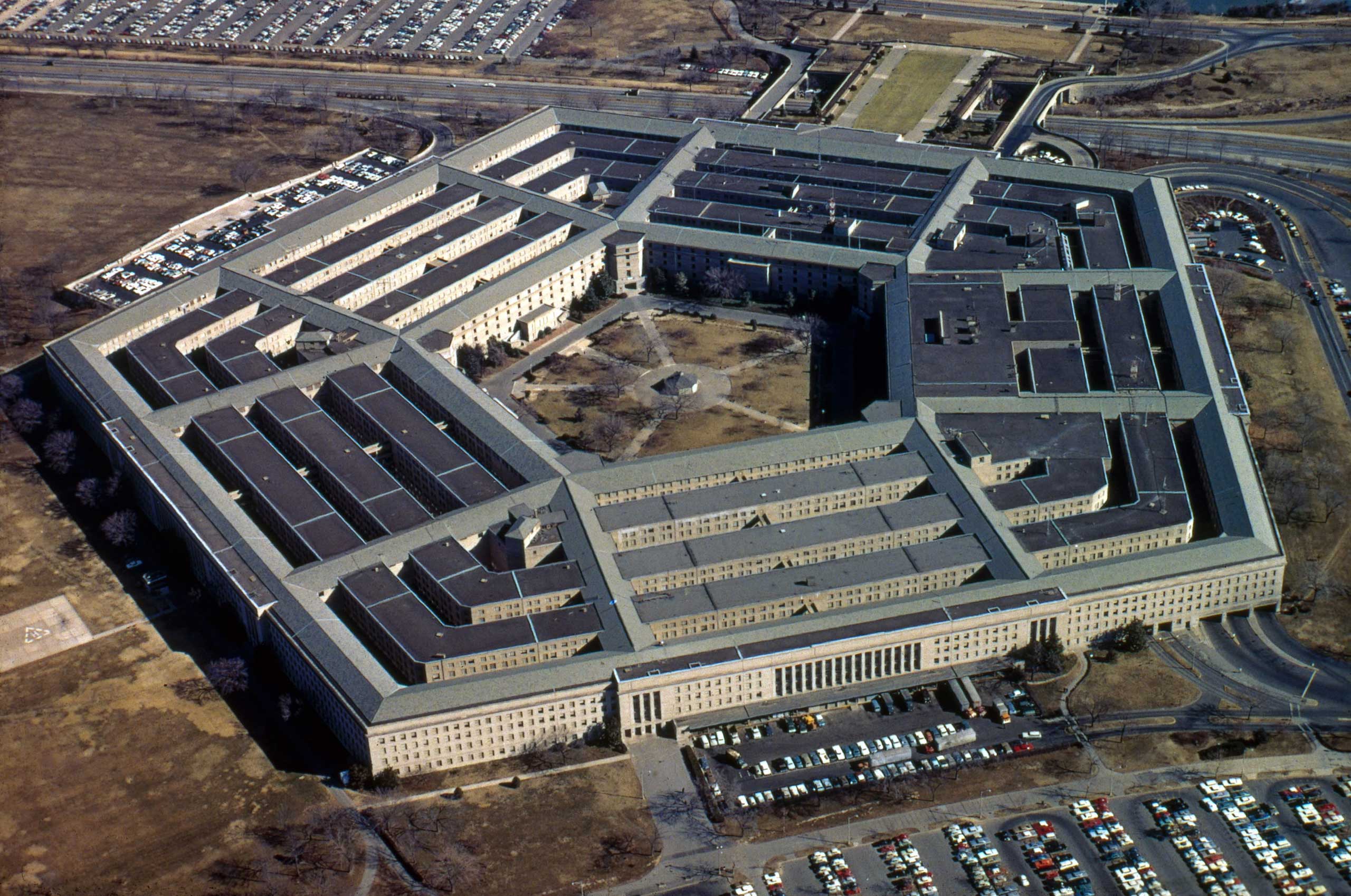

I couldn't. I don't want AI to be able to decide if my life should be ended, so I don't think it should be given that power over anyone. It seems that military commanders share my opinion and refuse to trust AI without extensive human oversight, but the Pentagon and DARPA (Defense Advanced Research Projects Agency) have initiated a new AI program to change that.

At a Washington conference celebrating DARPA's 60th anniversary, the agency revealed that it has allocated $2 billion to developing military AI over the next five years. While this isn't much by DARPA standards, it is a large sum for AI research and could bring serious improvements. It's the largest AI investment yet, but it's only one of more than 25 AI programs DARPA is running concurrently. In July, it was also revealed that defense contractor Booz Allen Hamilton had received $855 million for an unspecified five-year AI-related project, and that DARPA would grant up to $1 million to each research group that can improve AI's recognition of complex environments.

The main goal is to create an AI system that can explain its choices to a human overseer, proving that it is using common sense and has a digital "moral compass". DARPA also aims to address concerns that AI is unpredictable by showing that it can account for unexpected variables the way a human can. Currently, the AI systems in use can only give a "confidence rating" in the form of an error percentage, and they are not permitted to fire without a human signing off.

This move could be a successor to Project Maven, a Pentagon initiative to improve object and environment recognition in military situations. Google was its primary partner, but after an outcry from employees afraid that they were building software that could one day be used to kill people, Google executives decided not to renew the contract.

The RAND Corporation, another Pentagon contractor, has also voiced concerns. It has particularly emphasized the impact of AI on nuclear warfare, warning that AI could undermine some of the foundations of mutually assured destruction. Most notably, it argues that in the future AI could be used to predict the exact locations of mobile intercontinental ballistic missiles (ICBMs), allowing an adversary to destroy a foreign power's nuclear arsenal with conventional weapons. Facing that threat, the foreign power's only option might be to launch immediately, before its missiles can be destroyed, thus starting a nuclear war.

So far, the government has not responded to these concerns. Instead, the Trump administration has begun setting up a Joint Artificial Intelligence Center to coordinate AI research across the Department of Defense.

Ron Brachman, who previously led DARPA's AI research, said during the conference that "we probably need some gigantic Manhattan Project to create an AI system that has the competence of a three-year-old." We'll simply have to wait and see whether Trump wants to invest that much money, time and energy in AI, or whether Putin will do it first.