Every few years, it seems like there's an amazing new technology with the promise of making games look ever more realistic. Over the decades, we've had shaders, tessellation, shadow mapping, ray tracing – and now there's a new kid on the block: path tracing.

So if you're looking for the skinny on this latest development in graphics technology, you've come to the right place. Let's dive into the world of rendering and follow a path of light and learning.

What is path tracing?

The short and sweet answer to this question is... "path tracing is just ray tracing." The equations for modeling the behavior of light are the same, the use of data structures to accelerate the search for ray-triangle interactions is the same too, and modern GPUs use the same units to accelerate the process. It's also very computationally intensive.

But wait. If it's really the same, why does path tracing have a different name, and what benefit does it offer game programmers? Path tracing differs from ray tracing in that, instead of following lots of rays throughout an entire scene, the algorithm only traces the most likely path for the light.

We've explored how ray tracing works (see: A Deeper Dive: Rasterization and Ray Tracing), but a brief whiz through the process is required here. The frame starts off as normal: the graphics card renders all of the geometry – all of the triangles that make up the scene – and saves it to memory.

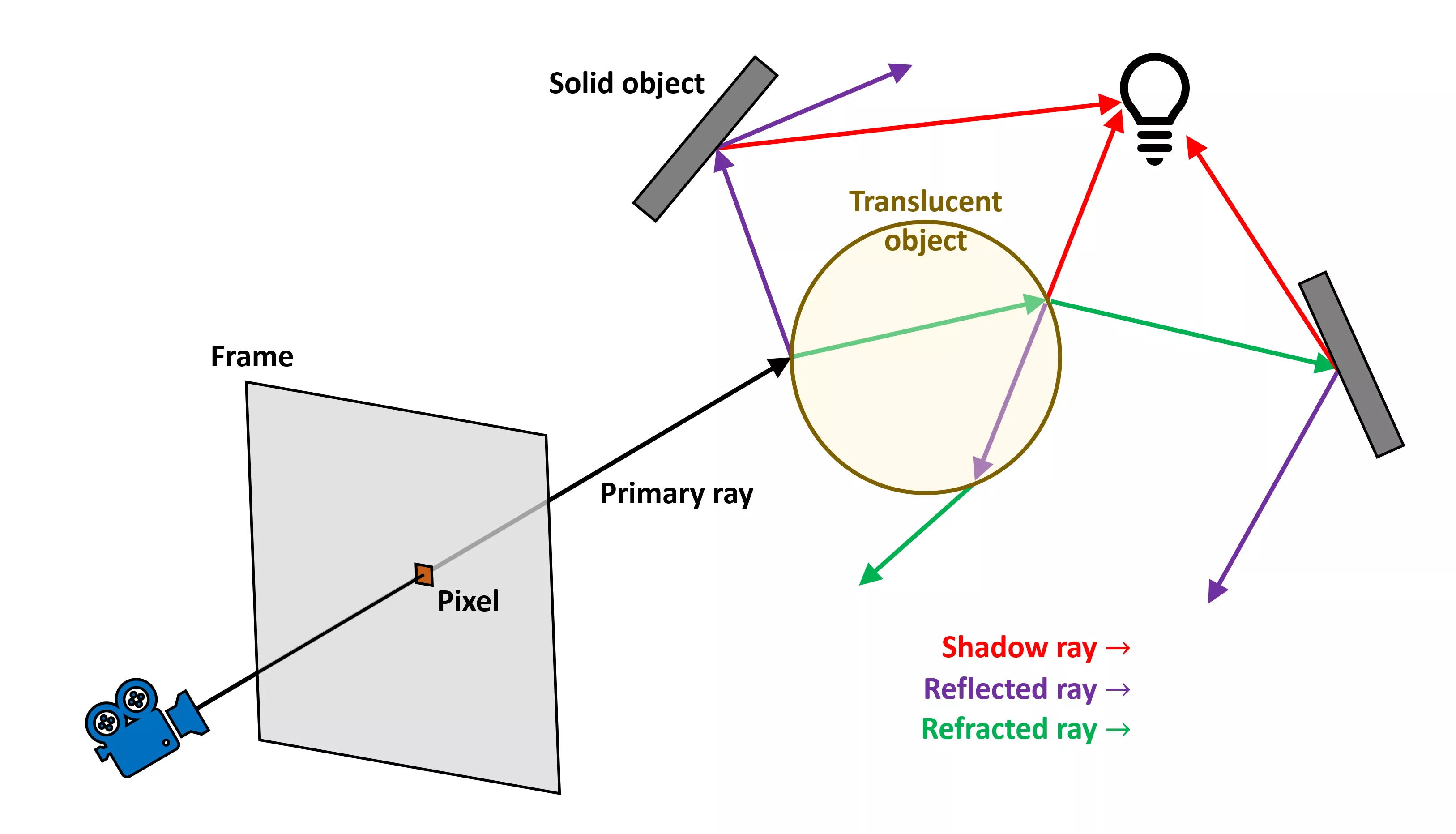

After a little bit of further processing, to organize the information in such a way that the geometry can be searched quicker, the ray tracing kicks in. For each pixel that comprises the frame, a single ray is cast from the camera, out into the scene.

Well, not in a literal sense – a vector equation is generated, with parameters set on the basis of where the camera and pixel are. Each ray is then checked against the scene's geometry and this is the first part of ray tracing's complexity. Fortunately, the latest GPUs from AMD and Nvidia come with dedicated hardware units to accelerate this process.
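To make that "vector equation" a little more concrete, here is a minimal Python sketch of how a primary ray might be built for one pixel. It assumes a simple pinhole camera sitting at the origin and looking down the negative z-axis; the function names and the default 90-degree field of view are illustrative choices, not something taken from any particular engine.

```python
import math

def primary_ray(px, py, width, height, fov_deg=90.0):
    """Return (origin, direction) for the ray through pixel (px, py).

    Assumes a pinhole camera at the origin looking down -z, with +y up.
    """
    aspect = width / height
    half = math.tan(math.radians(fov_deg) / 2.0)
    # Map the pixel centre onto an image plane one unit in front of the camera.
    sx = (2.0 * (px + 0.5) / width - 1.0) * aspect * half
    sy = (1.0 - 2.0 * (py + 0.5) / height) * half
    length = math.sqrt(sx * sx + sy * sy + 1.0)
    direction = (sx / length, sy / length, -1.0 / length)
    return (0.0, 0.0, 0.0), direction

def point_on_ray(origin, direction, t):
    """Evaluate the ray equation P(t) = origin + t * direction."""
    return tuple(o + t * d for o, d in zip(origin, direction))
```

Every point along the ray is then just the origin plus some distance `t` times the direction, which is the form the intersection tests work with.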

If a ray and object do interact, another calculation is done to work out exactly which triangle in the model is involved, and the color of that triangle will effectively modify the color of the pixel.
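One widely used way of doing that ray-versus-triangle check is the Möller–Trumbore algorithm. The Python sketch below is a plain software version for illustration only; it is an assumption that this particular test is a fair stand-in here, since what the dedicated GPU units actually do internally isn't covered in this article.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Return the distance along the ray to the triangle, or None on a miss."""
    e1 = sub(v1, v0)
    e2 = sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None  # ignore hits behind the ray's origin
```

A renderer would run a test like this only against the handful of triangles that the acceleration structure hasn't already ruled out, and keep the hit with the smallest distance.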

But light rarely hits an object and gets completely absorbed. In reality, there's a lot of reflection and refraction going on, so if you want the most realistic rendering possible, new vector equations are generated, one each for the reflected and refracted rays.
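The directions of those new rays come from two standard bits of vector math: mirror reflection and Snell's law. Here's a rough Python sketch, assuming unit-length vectors and a surface normal that points back toward the incoming ray; the function names and the `eta` parameter (the ratio of the two refractive indices) are just illustrative.

```python
import math

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def reflect(d, n):
    """Mirror direction d about the unit normal n: r = d - 2 (d . n) n."""
    k = 2.0 * dot(d, n)
    return tuple(di - k * ni for di, ni in zip(d, n))

def refract(d, n, eta):
    """Bend unit direction d through a surface with unit normal n.

    eta is n1 / n2, the ratio of refractive indices (outside over inside).
    Returns None when total internal reflection occurs.
    """
    cos_i = -dot(d, n)
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)  # Snell's law, squared
    if sin2_t > 1.0:
        return None
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * di + (eta * cos_i - cos_t) * ni for di, ni in zip(d, n))
```

A ray heading straight down onto a floor, for example, reflects straight back up, and passes through undeviated if it refracts.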

In turn, those rays get traced until they also hit an object, and the sequence continues until a chain of rays is finally bounced all the way back to a source of light in the scene. From the original primary ray, the total number of rays traced across the scene grows exponentially with every bounce.
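A quick back-of-the-envelope count shows how fast this gets out of hand. The Python sketch below assumes the worst case, where every single hit spawns exactly one reflected and one refracted ray and nothing terminates early; real scenes will branch less, so treat it as an upper bound.

```python
def rays_in_tree(bounces, branching=2):
    """Total rays for one pixel when every hit spawns `branching` new rays."""
    # Level 0 is the primary ray; each further level multiplies by `branching`.
    return sum(branching ** level for level in range(bounces + 1))
```

Under those assumptions, eight bounces already means 511 rays for one pixel, which works out to roughly a billion rays for a single 1920 x 1080 frame.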

Rinse and repeat across all of the other pixels in the frame, and the end result is a realistically lit scene... although a fair bit of additional processing is still needed to tidy up the final image.

But even with the most powerful of GPUs and CPUs, a fully ray traced frame takes an enormous amount of time to make – far, far longer than a traditionally rendered one, using compute and pixel shaders.

Now this is where path tracing fits into the picture, if one pardons the pun.

When more work means less work

The initial concept of path tracing was introduced by James Kajiya way back in 1986, while he was a researcher at Caltech. He showed that the problem of a processor grinding to a halt, working through an ever increasing number of rays, could be solved through the use of statistical sampling of the scene (specifically, Monte Carlo algorithms).

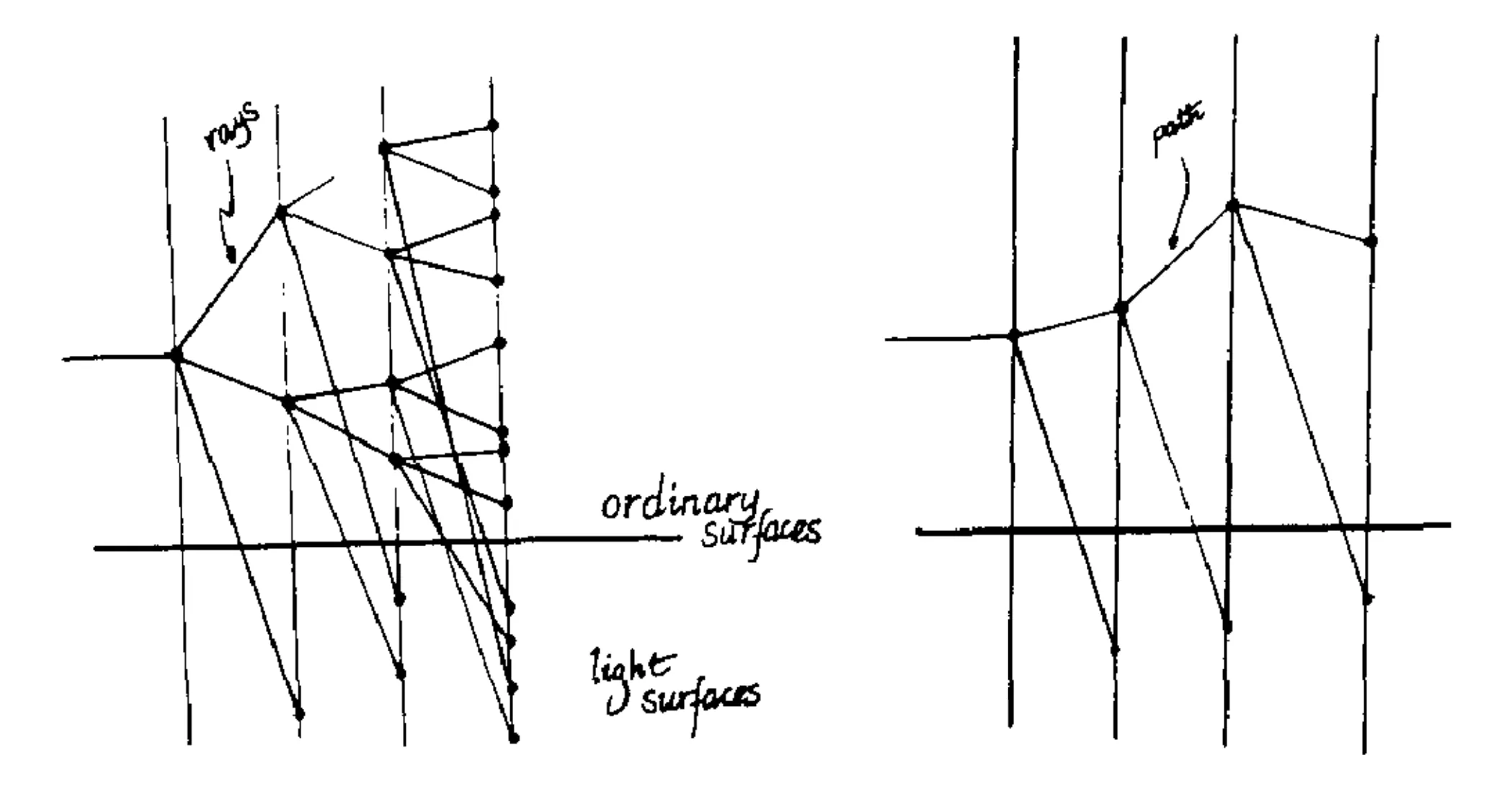

Traditional ray tracing involves calculating the exact path of reflection or refraction of each ray, and tracing them all the way back to one or more light sources. With path tracing, multiple rays are generated for each pixel but they're bounced off in a random direction. This gets repeated when a ray hits an object, and keeps on occurring until either a light source or a preset bounce limit is reached.
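That loop of random bounces can be sketched in a few dozen lines of Python. Everything here is a simplified assumption for illustration: the scene is a made-up one (a grey matte floor under a glowing ceiling), brightness is a single number rather than a color, and the names `toy_scene`, `trace_path`, and `render_pixel` are hypothetical rather than taken from any real renderer.

```python
import math
import random

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def random_hemisphere_direction(normal, rng):
    """Pick a uniformly random unit vector in the hemisphere around `normal`."""
    while True:
        # Rejection-sample a point inside the unit sphere, then normalise it.
        v = (rng.uniform(-1, 1), rng.uniform(-1, 1), rng.uniform(-1, 1))
        length2 = dot(v, v)
        if 1e-12 < length2 <= 1.0:
            break
    scale = 1.0 / math.sqrt(length2)
    if dot(v, normal) < 0.0:
        scale = -scale  # flip so the direction leaves the surface
    return (v[0] * scale, v[1] * scale, v[2] * scale)

def toy_scene(origin, direction):
    """Hypothetical scene: grey floor at y=0 below a glowing ceiling at y=2."""
    oy, dy = origin[1], direction[1]
    if dy < 0.0:  # heading down: hits the floor
        t = -oy / dy
        hit = {"normal": (0.0, 1.0, 0.0), "albedo": 0.5, "emission": 0.0}
    elif dy > 0.0:  # heading up: hits the light
        t = (2.0 - oy) / dy
        hit = {"normal": (0.0, -1.0, 0.0), "albedo": 0.0, "emission": 1.0}
    else:
        return None  # travelling exactly sideways: never hits anything
    hit["point"] = tuple(o + t * d for o, d in zip(origin, direction))
    return hit

def trace_path(origin, direction, intersect, rng, max_bounces=8):
    """Follow one random path and return the light it carries to the camera."""
    throughput = 1.0
    for _ in range(max_bounces + 1):
        hit = intersect(origin, direction)
        if hit is None:
            return 0.0  # the path left the scene
        if hit["emission"] > 0.0:
            return throughput * hit["emission"]  # reached a light source
        # Bounce in a random direction. For a matte surface sampled uniformly,
        # the Monte Carlo weight is albedo * 2 * cos(angle to the normal).
        direction = random_hemisphere_direction(hit["normal"], rng)
        throughput *= hit["albedo"] * 2.0 * dot(direction, hit["normal"])
        origin = hit["point"]
    return 0.0  # bounce limit reached without finding a light

def render_pixel(samples, seed=0):
    """Average many random paths for a camera ray that looks down at the floor."""
    rng = random.Random(seed)
    camera, view = (0.0, 1.0, 0.0), (0.0, -1.0, 0.0)
    total = sum(trace_path(camera, view, toy_scene, rng) for _ in range(samples))
    return total / samples
```

Any individual path gives a fairly wild answer, but in this toy scene the average over many paths should settle close to 0.5, which is simply the floor's albedo multiplied by the brightness of the light.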

This in itself probably doesn't seem like a huge change in the amount of computing required, so where's the magic part?

Not all of the rays will be used to create the final color of the pixel in the frame. Only a certain number of them will be sampled, and the algorithm uses those results to arrive at an almost ideal path of light bounces, from camera to light source. One can then scale the number of samples for each pixel to adjust the accuracy of the final image.
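The trade-off between sample count and accuracy follows the usual Monte Carlo rule of thumb: the noise falls with the square root of the number of samples, so quadrupling the samples roughly halves it. The Python sketch below illustrates this with a deliberately crude stand-in, where `rng.random()` plays the part of tracing one path; that substitution, and the function names, are assumptions made purely for the demonstration.

```python
import random
import statistics

def pixel_estimate(samples_per_pixel, rng):
    """Average the brightness of several random paths for one pixel.

    rng.random() stands in for the result of tracing a single path.
    """
    total = sum(rng.random() for _ in range(samples_per_pixel))
    return total / samples_per_pixel

def noise_level(samples_per_pixel, trials=500, seed=1):
    """Standard deviation of the pixel estimate across repeated renders."""
    rng = random.Random(seed)
    estimates = [pixel_estimate(samples_per_pixel, rng) for _ in range(trials)]
    return statistics.pstdev(estimates)
```

Comparing `noise_level(4)`, `noise_level(16)`, and `noise_level(64)` should show the spread shrinking by about half at each step, which is why doubling image quality this way gets expensive quickly.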

Despite an extra pile of math and coding being involved, the end result is that there are far fewer rays to process, even though path tracing typically fires off dozens of rays per pixel. Tracking rays and carrying out their interaction calculations are the reason for the performance hit, in comparison to normal rendering, so using fewer rays to color a pixel is clearly a good thing.

But here's the really clever part: ordinarily, fewer rays would result in less realistic lighting, but since the bulk of the color of the frame's pixels is only affected by the primary rays, dumping most or all of the secondary ones doesn't affect things as much as one might think.

Now, if the scene does contain lots of surfaces that will reflect and refract light, such as glass or water, then those secondary rays do become important. To get around this problem, either the algorithm is tweaked to account for the distribution of ray types one should be getting in a scene, or those specific surfaces are handled in their own 'fully ray traced' rendering pass.

A good developer will use the full gamut of rendering tools at their disposal: rasterization with shaders, path tracing, and full ray tracing. It's a lot more work to figure this all out, but it's ultimately less work for the hardware to deal with.

Why is path tracing in the news right now?

Several times over the past few years we've seen headlines about mods adding ray tracing to old classics, but most of those actually refer to path tracing. We heard it back in 2019 with an experimental mod for Crysis and Quake 2, or more recently with the unofficial Half-Life ray tracing mod, the Classic Doom mod, Descent, and many others. All path tracing.

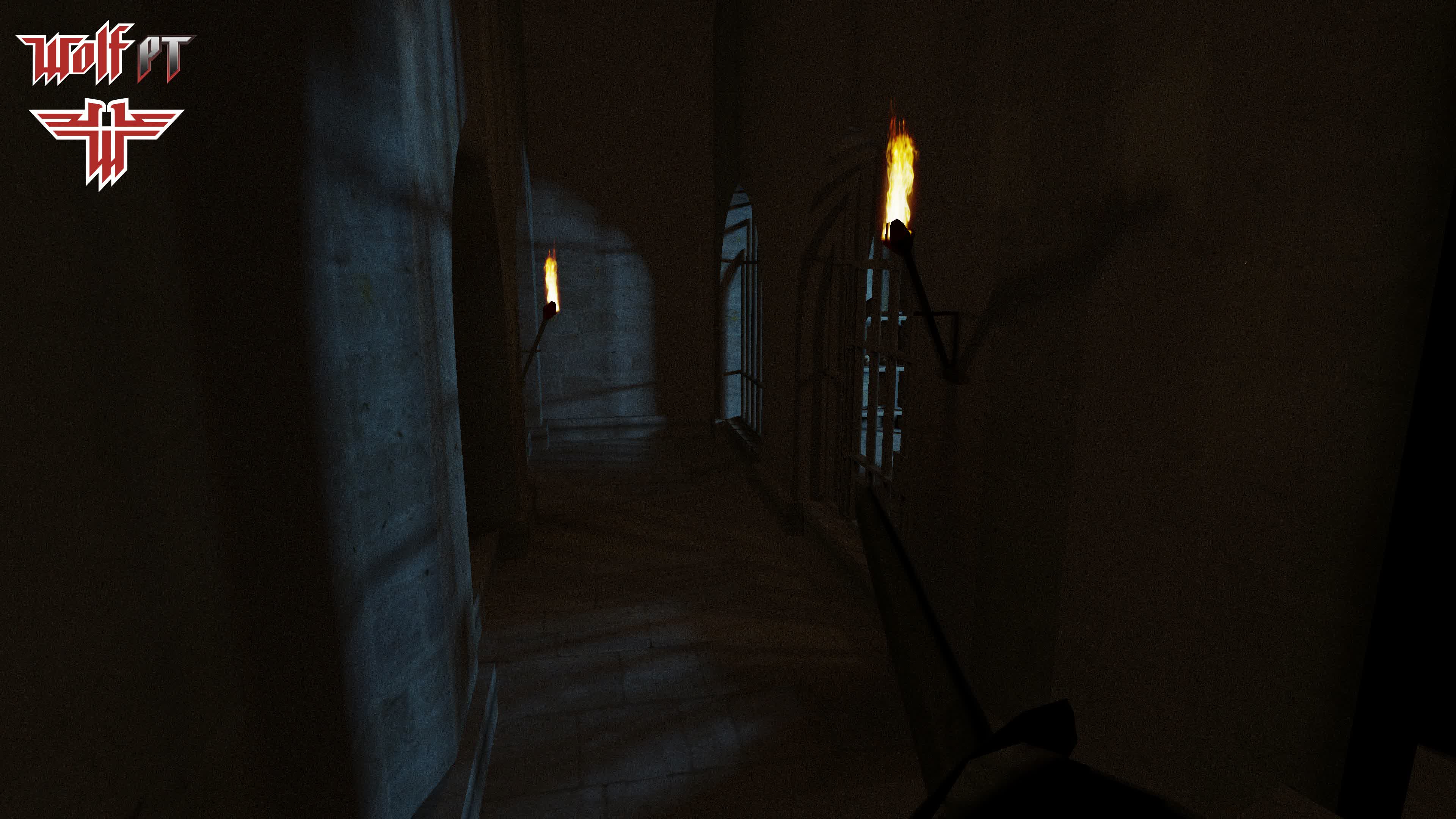

There was also the tweet from a senior R&D graphics engineer at AMD, who announced his project to update the original Return to Castle Wolfenstein game with a path traced renderer. See below:

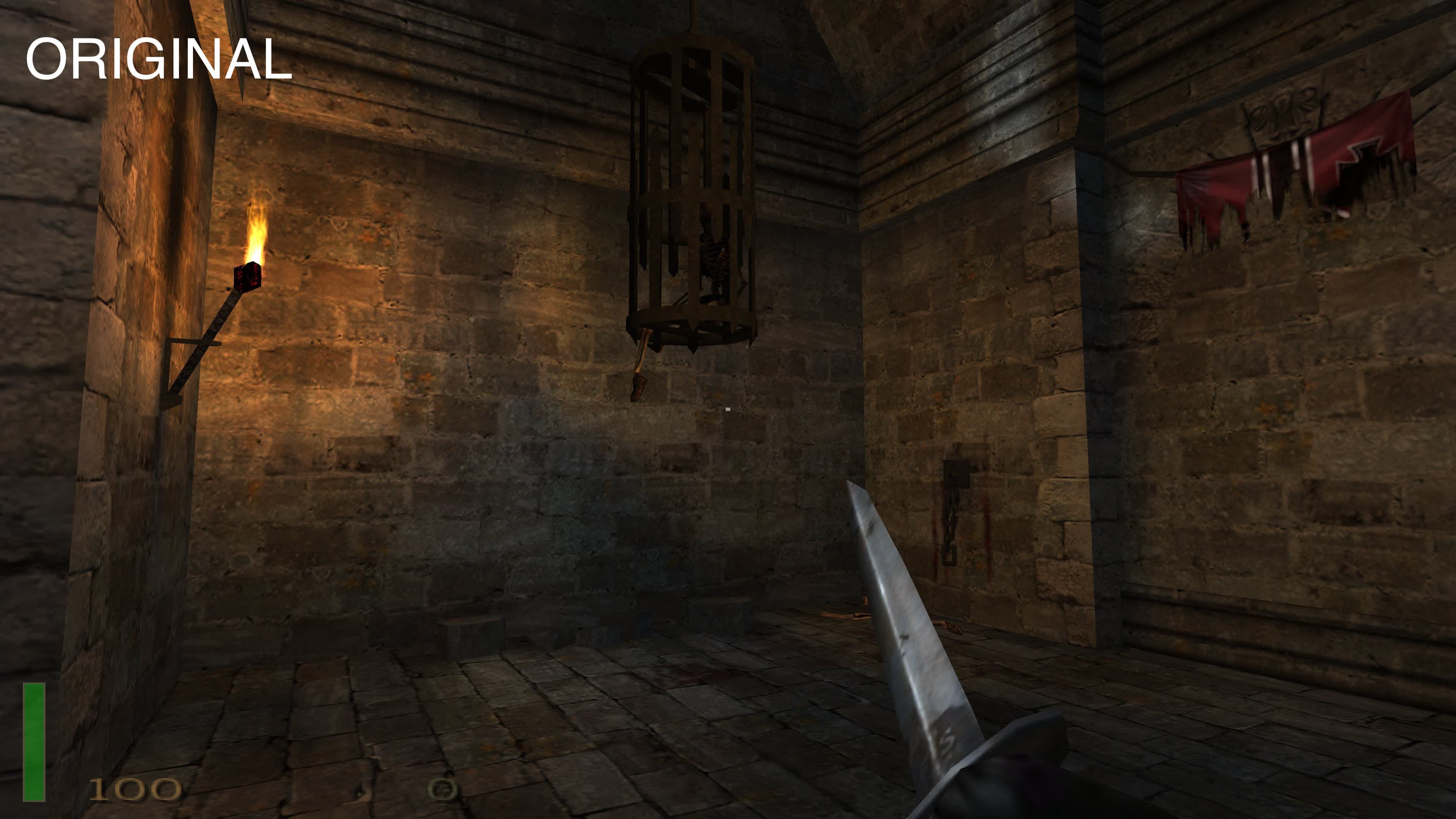

As noted above, Nvidia's Quake II remaster sporting a ray traced renderer was launched to help promote RTX technology. This was initially the work of one person, Christoph Schied, who created the remaster (technically known as Q2VKPT) as part of a research project. With contributions from other experts in graphics technology, Quake II RTX was born, and was the first well-known game to use path tracing for all its lighting.

The original models and textures are still present and the only aspect that was changed was how surfaces were lit and shadows generated. Static images aren't the best medium for demonstrating how effective the new lighting model is, but you can grab a free copy for yourself, or check out this video...

Bandying about cool phrases such as stochastic multiple importance sampling and variance reduction algorithms, the project highlighted two things: first of all, path tracing looks seriously cool, and secondly, it's still seriously tough on developers and hardware alike. If you want to see just how complex the math is, have a read of Chapter 47 of Ray Tracing Gems II.

But where the likes of Quake II RTX show what can be achieved by path tracing everything, the likes of Control and Cyberpunk 2077 demonstrate that incredible graphics are achievable through blending all methods of lighting and shadowing – rasterization and shaders still rule the roost, with ray tracing for reflections and shadows.

So we're still some way off before we see all games rendered using nothing but path tracing.

Following the path to a better future

Despite its relative newness in the world of real-time rendering, path tracing is definitely here to stay. We've already seen the results in the one game and path tracing is already heavily used in offline renderers, such as Blender, as well as the movie industry, with the likes of Autodesk Arnold and Pixar's RenderMan.

There are no signs of GPUs nearing any kind of limit to their maximum computing power just yet, so while ray tracing, traditional or path tracing, is still very demanding, more powerful hardware will come to market over the years.

All of which means that developers of future PC games are certainly going to explore any rendering technique that produces amazing graphics at an achievable performance, and path tracing has the potential to do just that.

There are consoles to consider, too. The Xbox Series X and PlayStation 5 both offer support for 'traditional' ray tracing, but given that their GPUs will be relatively outdated in just a few years' time, developers will be looking to make use of every possible shortcut to squeeze the last dregs of power out of those machines, before moving on to the next generation of consoles.

So there you have it – path tracing, the fast cousin of ray tracing. Looks almost as good, works a heck of a lot quicker. Given the continued advances of home PC and console graphics technology and performance, it won't be long before we'll see computer graphics from the latest blockbusters in our favorite games, too.