Unchecked artificial intelligence could pose many risks to humanity, and the tech industry has been grappling with ways to mitigate them for some time.

Now, it seems Intel and the car industry at large (including the likes of BMW, Audi, Daimler, and Fiat Chrysler) have come up with such a solution for one part of the AI world: self-driving cars.

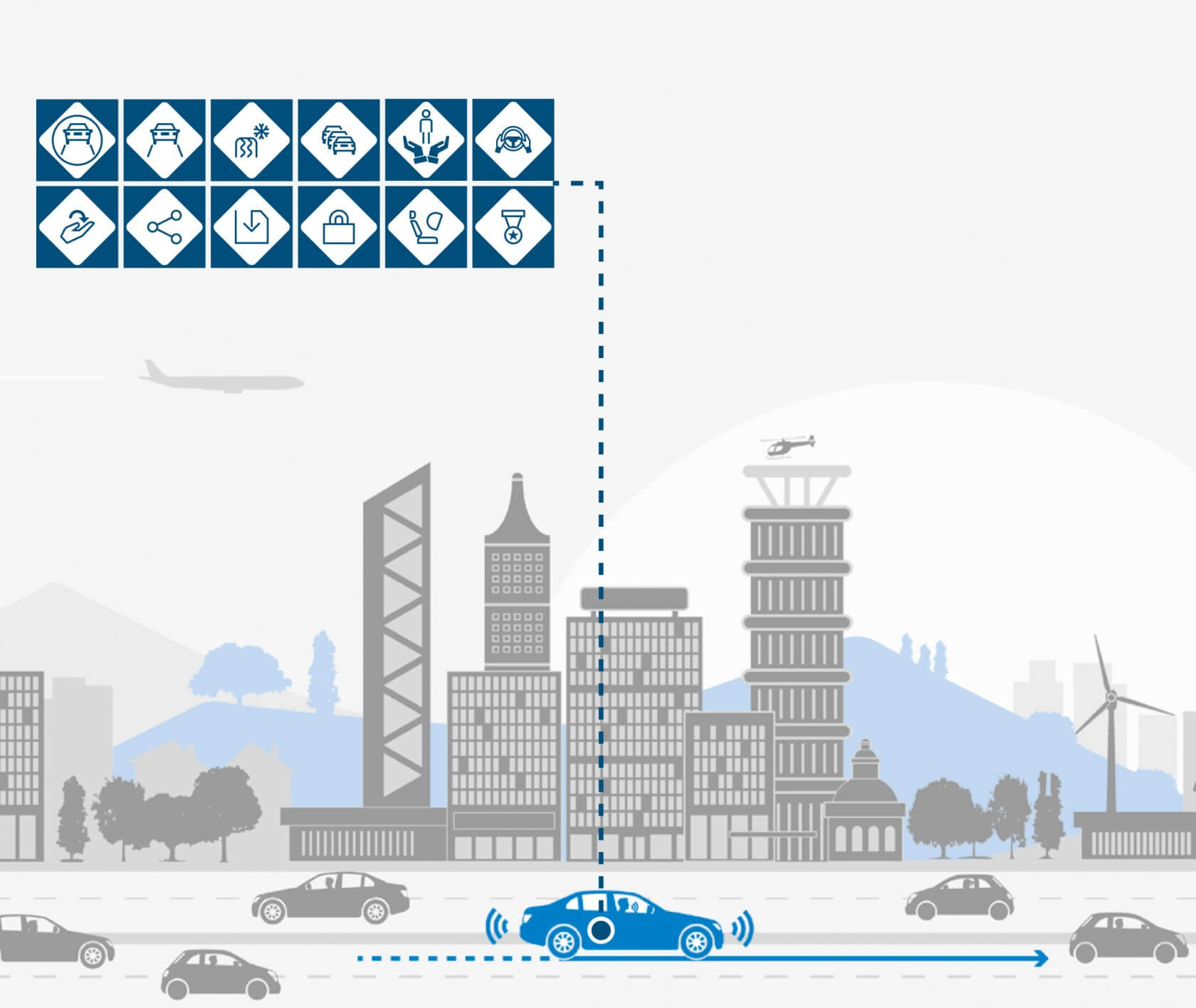

Intel and co. have published the first version of their "Safety First for Automated Driving" paper, which lays out 12 hopefully universal principles that all self-driving vehicles should adhere to going forward. We'll list the principles below, but each comes with far more detail than we can reasonably cover here – you'll want to read the full paper for that. The principles are listed on pages 6 through 10.

The suggested "Twelve Principles of Automated Driving" are Safe Operation, Operational Design Domain, Vehicle [Operator]-Initiated Handover, Security, User Responsibility, Vehicle-Initiated Handover, Interdependency Between the Vehicle Operator and the ADS (automated driving system), Safety Assessment, Data Recording, Passive Safety, Behavior in Traffic, and Safe Layer.

Combined, these principles aim to balance user and vehicle responsibility. They are meant to ensure that a driver knows what's expected of them at all times – for example, by explicitly informing them when a manual takeover is necessary – while preventing the vehicle's autonomous systems from putting drivers in harm's way in the first place.

It remains to be seen whether every carmaker will adopt these principles, but given the many controversies that have surrounded self-driving cars over the past couple of years, self-regulation like this will probably seem preferable to government intervention.