Bottom line: "A novice painter might set brush to canvas aiming to create a stunning sunset landscape --- craggy, snow-covered peaks reflected in a glassy lake --- only to end up with something that looks more like a multi-colored inkblot." Nvidia has created a tool that can turn the simplest drawings into photo-realistic images.

We've already seen deep-learning models that can create lifelike faces. On Monday, Nvidia Research unveiled a deep-learning model that can create realistic landscapes from rough sketches. The tool is called GauGAN, a tongue-in-cheek nod to post-impressionist painter Paul Gauguin.

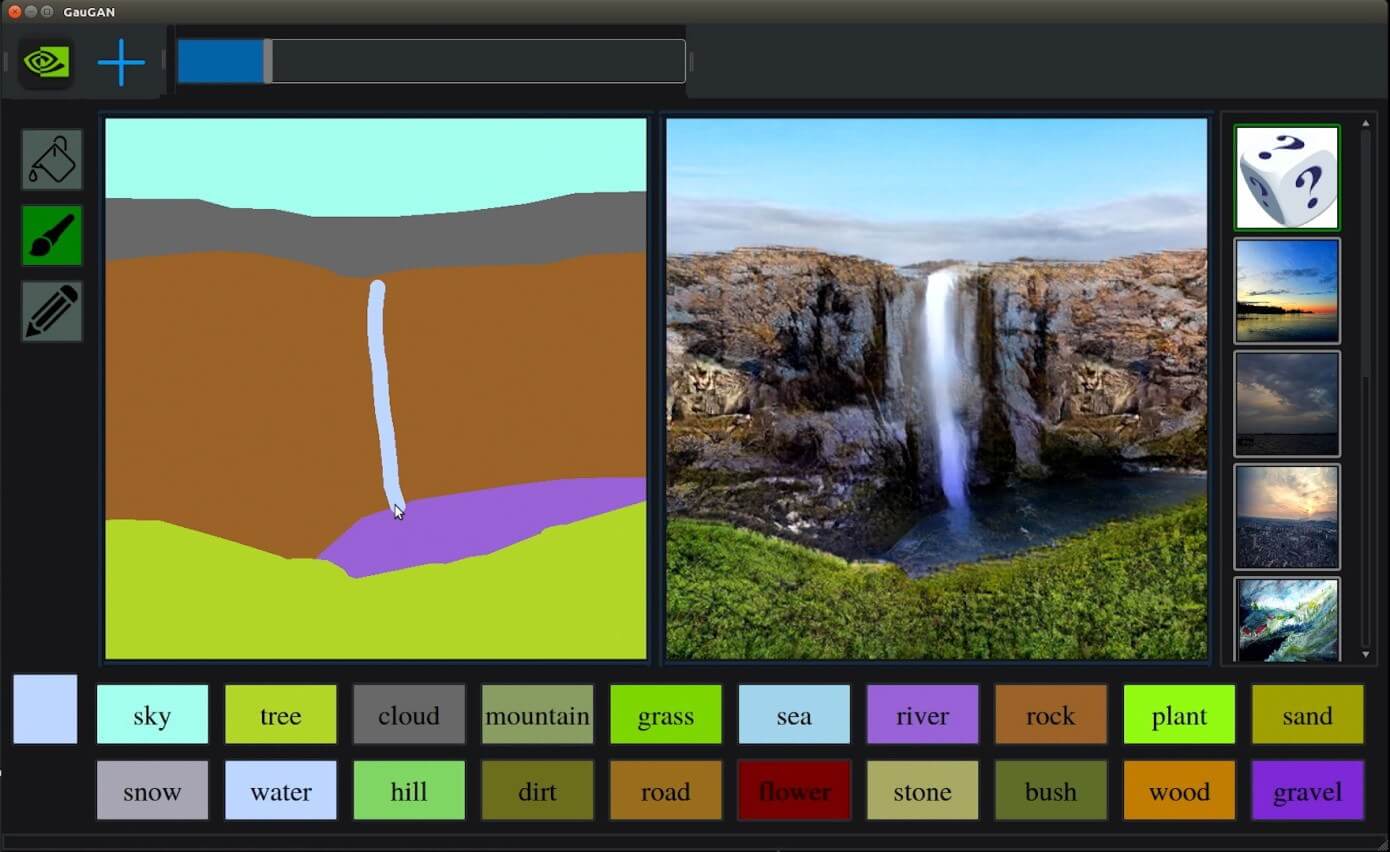

As its name indicates, GauGAN uses a generative adversarial network (GAN) to convert "segmentation maps" into photo-realistic images. Segmentation maps are simply crude drawings that divide the canvas into regions. Each region can then be labeled with a tag like sand, rock, water, or sky, which the GAN uses to create a detailed picture.
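To make the idea of a segmentation map concrete, here is a minimal Python sketch. It is an illustration only, not Nvidia's code: the label IDs, the `make_segmentation_map` and `one_hot` helpers, and the banded layout are all assumptions made for the example. It stores one label ID per pixel and then splits the grid into one binary mask per label, a common way to feed labeled regions to a conditional image generator.

```python
# Hypothetical label set: the tag names come from the article,
# the numeric IDs are arbitrary choices for this sketch.
LABELS = {"sky": 0, "rock": 1, "water": 2, "sand": 3}


def make_segmentation_map(height, width):
    """Return a height x width grid of label IDs in horizontal bands:
    sky on top, then rock, then water, then sand at the bottom."""
    seg = []
    for row in range(height):
        # Integer comparisons avoid floating-point edge cases.
        if row * 10 < 4 * height:
            label = "sky"
        elif row * 10 < 6 * height:
            label = "rock"
        elif row * 10 < 9 * height:
            label = "water"
        else:
            label = "sand"
        seg.append([LABELS[label]] * width)
    return seg


def one_hot(seg, num_labels):
    """Split the ID grid into one binary mask per label."""
    return [
        [[1 if cell == k else 0 for cell in row] for row in seg]
        for k in range(num_labels)
    ]


seg_map = make_segmentation_map(10, 8)
masks = one_hot(seg_map, len(LABELS))

# Print the map one row at a time; each digit is a label ID.
for row in seg_map:
    print("".join(str(cell) for cell in row))
```

Every pixel belongs to exactly one mask, so the "drawing" carries only where each material goes. All texture and color would have to come from the generator.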

"It's much easier to brainstorm designs with simple sketches, and this technology is able to convert sketches into highly realistic images," said Nvidia's Bryan Catanzaro, vice president of applied deep learning research.

The software isn't perfect - if you scrutinize the results, you'll find gaps between some objects that make them less than photorealistic. These gaps are a shortcoming of current neural networks, and projects like GauGAN hope to improve on them.

The software has three tools - a paint bucket, a pencil, and a pen. Below the tools are a series of object buttons. Users select one of the object buttons and then roughly sketch the object they selected. GauGAN then turns the user's sketch into a photorealistic version of the object.

It is not just pasting together pieces of images, mind you, but instead creating an original picture pixel by pixel. A generator network creates images and presents them to a discriminator network, which judges how realistic they look. The discriminator's feedback tells the generator how to improve, and the system uses over a million images from Flickr's Creative Commons as a reference for what real scenes look like.
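The tug-of-war between the two networks can be sketched with the textbook GAN objective. This is an assumption for illustration: the article does not say which loss functions GauGAN uses, and the function names below are made up for the example. Here the discriminator outputs a probability that an image is real, and each side is scored with binary cross-entropy.

```python
import math


def bce(prob, target):
    """Binary cross-entropy for one prediction, clamped to avoid log(0)."""
    eps = 1e-12
    prob = min(max(prob, eps), 1 - eps)
    return -(target * math.log(prob) + (1 - target) * math.log(1 - prob))


def discriminator_loss(real_scores, fake_scores):
    """The discriminator wants real images scored near 1 and fakes near 0."""
    real_term = sum(bce(p, 1.0) for p in real_scores) / len(real_scores)
    fake_term = sum(bce(p, 0.0) for p in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores):
    """The generator wants its fakes to be scored as real (near 1)."""
    return sum(bce(p, 1.0) for p in fake_scores) / len(fake_scores)


# Hypothetical discriminator outputs for a batch of two real photos
# and two generated images.
real_scores = [0.9, 0.8]
fake_scores = [0.3, 0.1]

print("discriminator loss:", discriminator_loss(real_scores, fake_scores))
print("generator loss:", generator_loss(fake_scores))
```

In training, the two losses are minimized in alternation: a generator whose images fool the discriminator gets a low loss, which in turn pushes the discriminator to become a stricter judge.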

Interestingly, when an element is changed in the segmentation map, the GAN will dynamically change other segments to match the new scene. For example, if you turn a Grass tag to Snow, the GAN will know that it has to alter the appearance of the sky as well. It also knows when to add reflections to water or that a vertical line with a water tag is supposed to be a waterfall.

"It's like a coloring book picture that describes where a tree is, where the sun is, where the sky is," said Catanzaro. "And then the neural network is able to fill in all of the detail and texture, and the reflections, shadows, and colors, based on what it has learned about real images."

To create these real-time results, GauGAN must be run on a Tensor platform, meaning only the latest GPUs from Nvidia would be compatible. Catanzaro sees applications in architecture, urban planning, landscape design, game development, and more.

Nvidia will be presenting its research at the CVPR conference in June. However, those attending this week's GPU Tech Conference in San Jose, California can try it themselves at the Nvidia booth. A public demo may eventually be available on Nvidia's AI Playground.