The big picture: MIT researchers are the first to experiment with using neural networks to reveal invisible objects in photos taken in the dark. Their work could one day lead to safer medical and lab imaging as well as improved astrophotography.

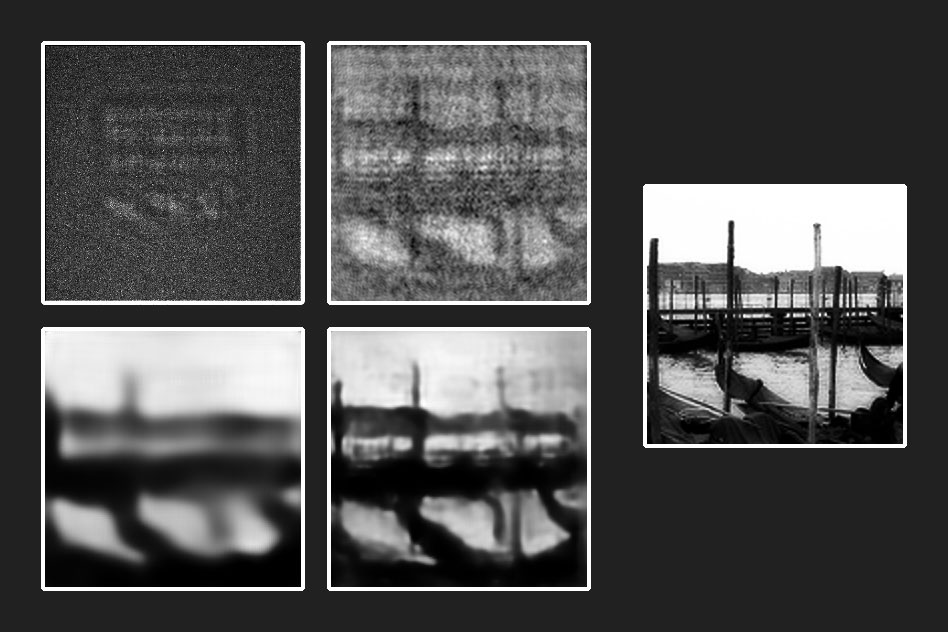

Researchers at the Massachusetts Institute of Technology (MIT) have trained a deep neural network to reconstruct objects from images taken in near-total darkness. The resulting reconstruction of a pattern is "more defined than a physics-informed reconstruction of the same pattern, imaged in light that was more than 1,000 times brighter."

The team fed the neural network more than 10,000 images of transparent, glass-like etchings captured in extremely low light (about one photon per pixel).

"When we look with the naked eye, we don't see much; they each look like a transparent piece of glass," said Alexandre Goy, lead author of the study published in Physical Review Letters. "But there are actually very fine and shallow structures that still have an effect on light."

After training the neural network, the team created a new pattern that wasn't part of the original data set and fed an image of it to the system. The results show that "deep learning can reveal invisible objects in the dark," Goy said.

George Barbastathis, professor of mechanical engineering at MIT, noted that blasting biological cells in the lab with light can burn them, leaving nothing to image. Likewise, exposing patients to X-rays can increase their risk of cancer. With this technique, the researchers have demonstrated that it's possible to achieve the same image quality with far less exposure to light or X-rays.

The technique could also be used to advance astronomical imaging, we're told.

Image courtesy Konstantin Shaleev, Shutterstock