The big picture: AI is trendy, but it is also making real-world advances that will be noticeable in consumer products. Nvidia's latest Tesla T4 GPU will help bring down the cost of services that rely on cloud-based AI systems by offering better performance with relatively low power consumption.

Nvidia has launched a new AI data center platform using new Tesla T4 GPUs. The TensorRT Hyperscale Inference Platform is designed to accelerate inferences made from voice, images, and video.

Arriving with 320 Turing Tensor Cores and 2,560 CUDA cores, the Tesla T4 offers extensive number-crunching abilities in a small PCIe form factor requiring only 75W. FP16 performance peaks at 65 teraflops, while integer throughput reaches up to 130 TOPS for INT8 and 260 TOPS for INT4. A standard 16GB of GDDR6 memory results in bandwidth exceeding 320 GB/s.
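To put those numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It takes the peak throughput figures and the 75W power requirement quoted above and works out how throughput scales as precision drops, plus peak operations per watt. The function and variable names are made up for illustration, and the sketch assumes the card draws its full 75W at peak throughput, so real-world results will likely differ.

```python
# Peak throughput figures quoted in the article, in trillions of operations per second.
PEAK_TERA_OPS = {"FP16": 65, "INT8": 130, "INT4": 260}

# Power requirement quoted in the article. Assumption: treated as draw at peak throughput.
BOARD_POWER_WATTS = 75


def speedup_over_fp16(precision):
    """Return peak throughput at the given precision relative to FP16."""
    return PEAK_TERA_OPS[precision] / PEAK_TERA_OPS["FP16"]


def tera_ops_per_watt(precision):
    """Return peak trillions of operations per second per watt (theoretical)."""
    return PEAK_TERA_OPS[precision] / BOARD_POWER_WATTS


for precision in PEAK_TERA_OPS:
    print(
        f"{precision}: {speedup_over_fp16(precision):.0f}x FP16, "
        f"{tera_ops_per_watt(precision):.2f} tera-ops per watt"
    )
```

Each halving of precision doubles peak throughput, which is why lower-precision inference is attractive wherever a model can tolerate it.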

When working with video, the T4 can decode up to 38 full-HD streams simultaneously. New dedicated hardware transcoding engines effectively double the performance in this use case over previous generation hardware.

Nvidia's goal with the new platform is to offer real-time processing with reduced latency in end-to-end applications. Different types of neural networks are needed to run inference on different kinds of input media, which is why accelerating all of them on one architecture is no easy feat.

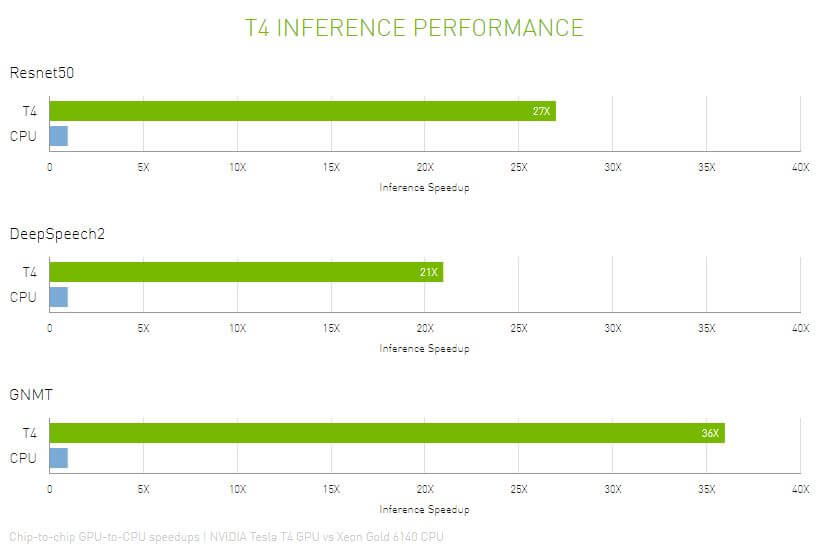

When running AI inference software, Tesla T4 GPUs can handle more than 40 times as many queries as CPUs alone. Note that Nvidia's comparison is against an 18-core Xeon Gold that retails for north of $2,000.

Nvidia estimates that the AI inference market will reach $20 billion over the next five years. Customers helping Nvidia realize that potential include Microsoft, Google, HPE, Dell EMC, Cisco, and IBM Cognitive Systems.

Early access to Tesla T4 GPUs on Google Cloud Platform is available upon request following review. Other major cloud providers will be implementing Nvidia's new GPUs shortly.