Moderation is not an easy thing for social media platforms to get right. With millions of users publishing image, video, and text posts every hour, manually reviewing everything that goes up is off the table.

That's why companies like YouTube, Facebook, and Twitter have turned to algorithms and artificial intelligence to help police their platforms. The results of these AI-powered moderation tools have been mixed – just ask your favorite YouTuber how they feel about the website – but it's clear that there isn't really a better solution for the time being.

However, Facebook may have found a way to at least make its moderation tools more accurate. In a blog post published today, three company employees explain a new tool Facebook has already begun testing in the wild: Rosetta.

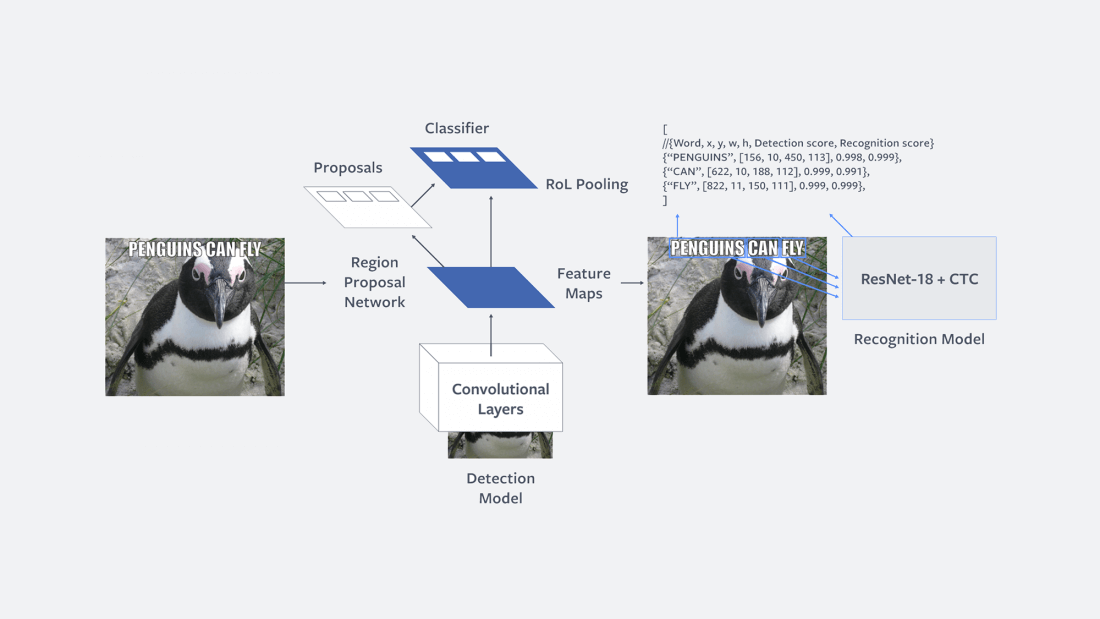

Rosetta is a "large-scale" machine learning system designed to extract text from public Facebook and Instagram images and videos, in a "wide variety" of languages, and then work out what the text and the image mean when taken together.

In other words, Facebook is developing an AI that can understand memes.

That may sound like a pretty ridiculous concept at first, but Facebook isn't doing this for the fun of it. Rosetta's primary purpose seems to be detecting content that violates the tech giant's "hate-speech" policies and might otherwise fly under the radar.

However, Rosetta could have further uses down the line. In addition to offering enhanced moderation, it could improve the accuracy of personalized News Feed content and boost the "relevance and quality" of on-site image search results.

Rosetta will almost certainly be controversial among some members of the meme-loving public, but here's hoping the technology will prove smart enough to distinguish between silly-but-harmless content and truly offensive imagery.