Forward-looking: Fake news - or rather, the spread of fake news - has evolved into a major issue in a relatively short amount of time. Even with its proliferation, many people can pick up on context clues to help sniff out bogus propaganda. With photorealistic videos, that's going to be far more difficult to do. In other words, seeing is no longer believing.

Fake news is about to get a whole lot more convincing. Machine-learning experts have developed a neural network that enables the photorealistic re-animation of portrait videos with stunning accuracy.

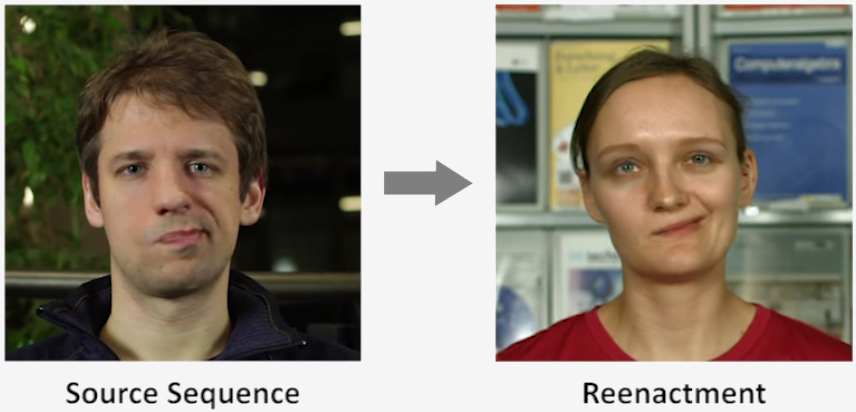

In a paper titled "Deep Video Portraits," researchers note that existing approaches are restricted to manipulations of facial expressions. Their new approach, however, transfers the full 3D head position, head rotation, facial expression, eye gaze and eye blinking from a source actor to a portrait video of a target actor.

You may be familiar with the term "deepfakes," which is often associated with superimposing existing images or video onto source images or videos. The technique has surged in popularity recently, as it was used to create fake celebrity pornography (plastering a celeb's head on an adult movie star's body).

These "deep video portraits" are in the same wheelhouse as deepfakes, albeit far more convincing (technically, they're also quite a bit different, but the goal - visual trickery - is the same).

The mind immediately jumps to all the nefarious acts that this technology could enable, and it's a valid concern. Justus Thies, co-author of the paper and a postdoctoral researcher at the Technical University of Munich, told The Register that he's aware of the ethical implications of re-enactment projects.

Yet for all of the potential dangers associated with using AI to create fake videos, there are also some practical applications that could benefit from the technology. As highlighted in the researchers' video, applying the technique to the dubbing of foreign language movies results in a significantly better product.

It's not perfect, however, as drastic changes in facial expression or head poses can lead to artifacts. But with a still subject and a static background, the untrained eye is going to have a hard time detecting anything out of the ordinary.