A team of Nvidia researchers has developed a new deep learning technique that lets robots learn to mimic human actions just by observing how people perform a task. The technique can greatly reduce the time it takes to program robots to carry out their intended tasks.

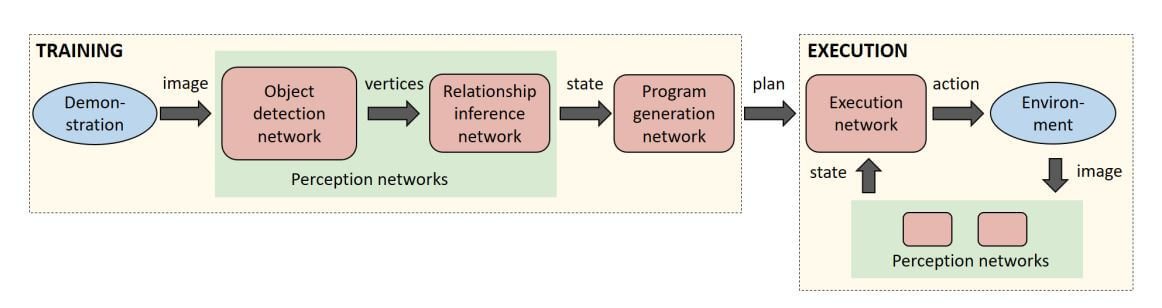

One of the most impressive parts of Nvidia's research is that a human needs to perform a task only once for the robot to learn how to repeat it. A video camera streams a live feed to a pair of neural networks that handle object recognition.

The resulting location data is then handed off to another network that tracks how the locations of movable objects change over time. A final network plans the robot's movements and attempts to account for any potential interference from the environment. The system also generates a human-readable list of the steps to be taken, so a person can manually correct any errors in the process.
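To make the flow of data through these stages concrete, here is a minimal Python sketch of a block-stacking demonstration. It is not Nvidia's implementation: the neural networks are replaced by simple geometric rules, each frame is assumed to arrive as a ready-made list of object positions, and every name in it (`Detection`, `detect_objects`, `infer_stacking`, `generate_plan`, the tolerance values) is hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One detected object and its position (hypothetical stand-in for network output)."""
    name: str
    x: float
    y: float
    z: float  # height above the table, in meters


def detect_objects(frame):
    """Stand-in for the object-recognition stage.

    In this sketch a frame is already a list of Detection objects,
    so the function simply copies it.
    """
    return list(frame)


def infer_stacking(detections, xy_tol=0.02, block_height=0.05, z_tol=0.01):
    """Return (upper, lower) name pairs where one block rests directly on another.

    Assumes all blocks share the same height; a rule-based substitute
    for a learned relationship model.
    """
    pairs = []
    for upper in detections:
        for lower in detections:
            if upper is lower:
                continue
            aligned = (abs(upper.x - lower.x) <= xy_tol
                       and abs(upper.y - lower.y) <= xy_tol)
            resting = abs((upper.z - lower.z) - block_height) <= z_tol
            if aligned and resting:
                pairs.append((upper.name, lower.name))
    return pairs


def generate_plan(frames):
    """Treat each frame as an independent still image and emit one
    human-readable step whenever a new stacking relation first appears."""
    seen = set()
    steps = []
    for frame in frames:
        for pair in infer_stacking(detect_objects(frame)):
            if pair not in seen:
                seen.add(pair)
                steps.append(f"Place {pair[0]} on {pair[1]}")
    return steps


# A three-frame demonstration: blue is stacked on red, then green on blue.
demo_frames = [
    [Detection("red", 0.0, 0.0, 0.0), Detection("blue", 0.2, 0.0, 0.0),
     Detection("green", 0.4, 0.0, 0.0)],
    [Detection("red", 0.0, 0.0, 0.0), Detection("blue", 0.0, 0.0, 0.05),
     Detection("green", 0.4, 0.0, 0.0)],
    [Detection("red", 0.0, 0.0, 0.0), Detection("blue", 0.0, 0.0, 0.05),
     Detection("green", 0.0, 0.0, 0.10)],
]

plan = generate_plan(demo_frames)
for number, step in enumerate(plan, start=1):
    print(f"{number}. {step}")
```

Because the plan is a plain list of strings, a person reviewing it could edit or reorder a step before anything is sent to a robot, which mirrors the manual correction step described above.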

Running all of those neural networks takes serious computing power and requires Titan X GPUs. Each frame of video is processed as a still image, so the exact camera used does not matter to the AI system.

Nvidia has published its full research paper and will present it at the International Conference on Robotics and Automation in Brisbane, Australia, this week.

The team behind this demonstration will continue to develop new capabilities for scenarios beyond block stacking. One potential use is teaching industrial automation robots how to assemble products by watching human operators.