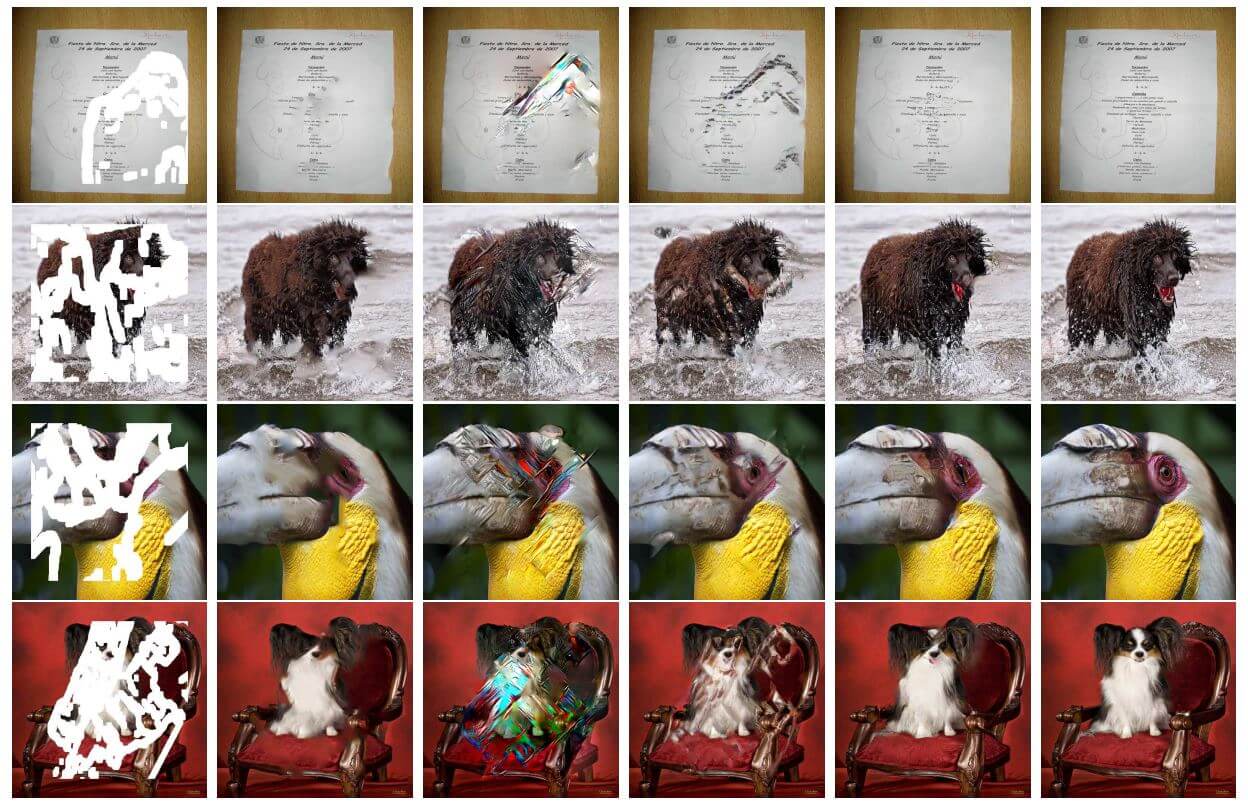

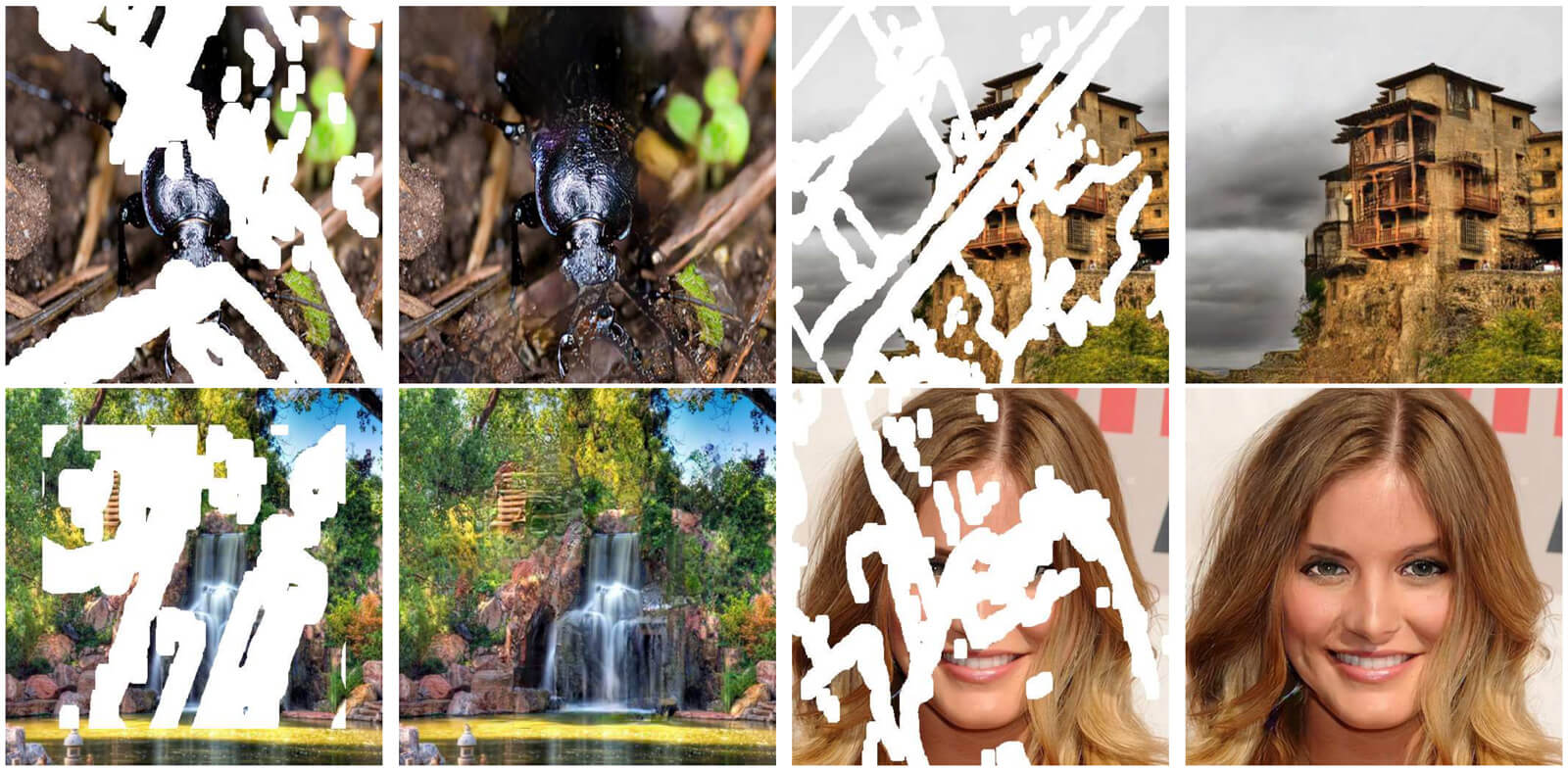

Image retouching is often synonymous with Adobe Photoshop, but Nvidia has the hardware needed for more advanced manipulation techniques. Its researchers have developed an enhanced deep learning technique for "image inpainting," which lets sections of an image be removed and filled in with realistic, software-generated content.

Photoshop's Content-Aware Fill, which debuted in CS5, may come to mind, but Nvidia's research project goes well beyond sampling surrounding areas and detecting edges. To train the neural network, the researchers generated over 55,000 masks of random holes and streaks, plus roughly 25,000 more to test its abilities.

The masks were grouped into six categories, based on hole size relative to the input image, to help improve reconstruction accuracy. Training was accelerated with Tesla V100 GPUs and the PyTorch deep learning framework.
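To make the idea of random hole-and-streak masks concrete, here is a minimal sketch in plain Python. It is not the researchers' generator: the random-walk streaks, the function names (`random_mask`, `hole_ratio`, `size_category`), and the six equal-width buckets by hole-to-image area ratio are all illustrative assumptions.

```python
import random


def random_mask(height, width, num_streaks=4, max_thickness=3, seed=None):
    """Return a 2D list where 1 marks a valid pixel and 0 marks a hole.

    Streaks are drawn as short random walks (an assumption made for
    illustration, not the method used in the research).
    """
    rng = random.Random(seed)
    mask = [[1] * width for _ in range(height)]
    for _ in range(num_streaks):
        y, x = rng.randrange(height), rng.randrange(width)
        thickness = rng.randint(1, max_thickness)
        steps = rng.randint(1, max(height, width))
        for _ in range(steps):
            # Punch a thickness x thickness square hole at the walker position.
            for dy in range(thickness):
                for dx in range(thickness):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < height and 0 <= xx < width:
                        mask[yy][xx] = 0
            # Move the walker one step in a random direction, clamped to the image.
            y = min(max(y + rng.choice((-1, 0, 1)), 0), height - 1)
            x = min(max(x + rng.choice((-1, 0, 1)), 0), width - 1)
    return mask


def hole_ratio(mask):
    """Fraction of pixels in the mask that are holes."""
    total = sum(len(row) for row in mask)
    holes = sum(row.count(0) for row in mask)
    return holes / total


def size_category(ratio, num_categories=6, max_ratio=0.6):
    """Bucket a hole ratio into one of num_categories roughly equal-width bins.

    Returns an index from 0 to num_categories - 1, or None when the ratio
    is zero or exceeds max_ratio. The bin edges are hypothetical.
    """
    if ratio <= 0 or ratio > max_ratio:
        return None
    bin_width = max_ratio / num_categories
    return min(int(ratio / bin_width), num_categories - 1)


if __name__ == "__main__":
    mask = random_mask(64, 64, seed=42)
    ratio = hole_ratio(mask)
    print(f"hole ratio: {ratio:.3f}, category: {size_category(ratio)}")
```

Sorting masks by how much of the image they cover lets a model be evaluated separately on small and large holes, which is one plausible reason for categorizing them.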

Previous approaches to image inpainting are at a disadvantage compared to Nvidia's new method because the network's output for missing pixels depends strongly on whatever placeholder values are fed in for those pixels. This dependency causes artifacts and blurriness. To remedy the problem, Nvidia's researchers use a partial convolution layer: the convolution is masked and renormalized so that each output depends only on valid (non-hole) pixels, and the mask is then updated so the hole shrinks from layer to layer.
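A partial convolution can be sketched in a few lines of plain Python. This is a single-channel toy version, not Nvidia's implementation. Each output pixel is computed only from valid pixels in its window, rescaled by the ratio of window size to valid-pixel count, and the mask is updated so that any window containing at least one valid pixel becomes valid. The function name `partial_conv2d` and the choice to treat out-of-bounds padding as holes are assumptions made for this example.

```python
def partial_conv2d(image, mask, kernel, bias=0.0):
    """Single-channel partial convolution with a same-size output.

    image, mask: 2D lists of equal shape; mask is 1 for valid pixels, 0 for holes.
    kernel: square 2D list with an odd side length.
    Returns (output, updated_mask).
    """
    h, w = len(image), len(image[0])
    k = len(kernel)
    r = k // 2
    window_size = k * k
    out = [[0.0] * w for _ in range(h)]
    new_mask = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            valid = 0
            for dy in range(k):
                for dx in range(k):
                    yy, xx = y + dy - r, x + dx - r
                    # Pixels outside the image are treated as holes (assumption).
                    if 0 <= yy < h and 0 <= xx < w and mask[yy][xx]:
                        acc += kernel[dy][dx] * image[yy][xx]
                        valid += 1
            if valid > 0:
                # Renormalize so the result does not shrink when part of the
                # window is missing, then mark this location as valid.
                out[y][x] = acc * (window_size / valid) + bias
                new_mask[y][x] = 1
            # Otherwise the output stays 0 and the location remains a hole.
    return out, new_mask


if __name__ == "__main__":
    # Flat gray 5x5 image with a 3x3 hole holding garbage values.
    image = [[5.0] * 5 for _ in range(5)]
    mask = [[1] * 5 for _ in range(5)]
    for yy in range(1, 4):
        for xx in range(1, 4):
            image[yy][xx] = 999.0
            mask[yy][xx] = 0
    box = [[1.0 / 9.0] * 3 for _ in range(3)]

    out1, mask1 = partial_conv2d(image, mask, box)
    out2, mask2 = partial_conv2d(out1, mask1, box)
    print(sum(row.count(0) for row in mask1))  # holes left after one layer
    print(sum(row.count(0) for row in mask2))  # holes left after two layers
```

In the demo, the garbage values inside the hole never reach the output, and the hole shrinks with each pass until it is filled. That behavior is what removes the dependency on placeholder input values.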

Another key advantage of Nvidia's solution is that the holes to be filled can be any shape. Traditional attempts at image reconstruction have struggled with non-rectangular regions. "To the best of our knowledge, we are the first to demonstrate the efficacy of deep learning image inpainting models on irregularly shaped holes," said an Nvidia researcher.

The research team will next look at how partial convolution techniques can be applied to super-resolution. As 4K and higher resolutions slowly become more affordable for the masses, the ability to upscale lower-resolution content will become even more important.