Researchers at MIT have developed a chip that processes neural network computations three to seven times faster than its predecessors. The silicon pulls off this feat while cutting power consumption by as much as 95 percent, which could make it practical to run neural networks on smartphones or perhaps even household appliances.

Neural networks are densely interconnected meshes of basic information processors that learn to perform tasks such as speech or facial recognition by analyzing massive sets of "training" data. Most neural nets are quite large, and their computations consume copious amounts of energy, which limits where they can be used.

As MIT points out, most smartphone apps that use neural nets simply upload data to the cloud, where it is processed remotely before the results are sent back to the handset. This approach has its advantages (it can tap into huge neural networks, for example) but also some shortcomings: you're sending potentially sensitive data to a third party, and you're at the mercy of your Internet connection's latency. If you happen to be in a network dead zone, you can't use the neural network at all.

Fortunately, brilliant minds like those at MIT are working to make neural networks practical to run locally.

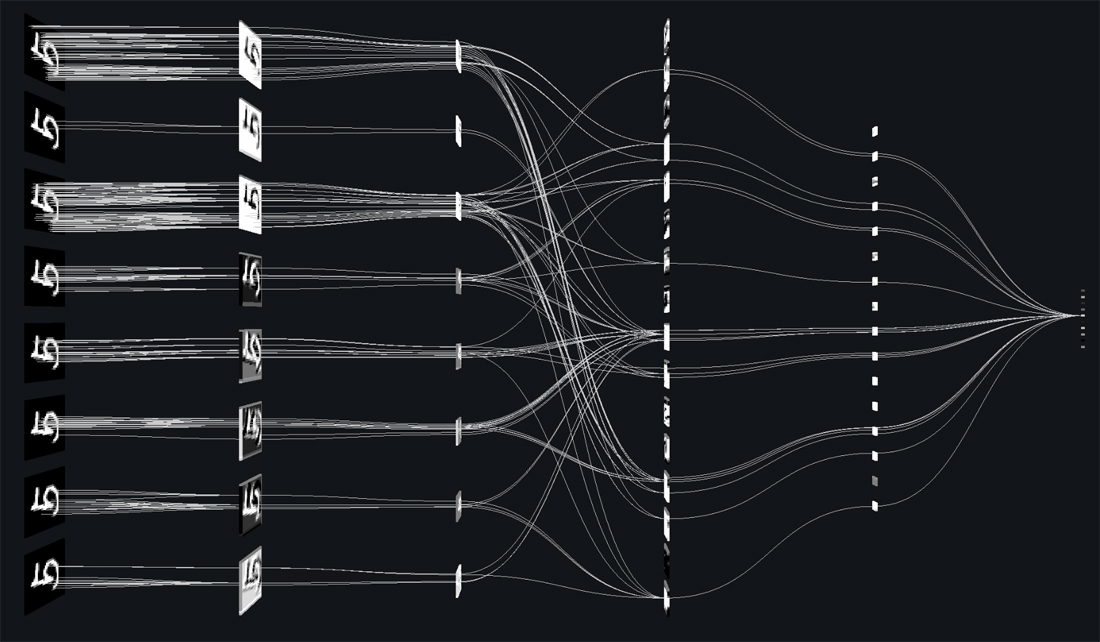

Avishek Biswas, the MIT graduate student who led the new chip's development, explained that in the general processor model, memory sits in one part of the chip and the processor in another. Data moves back and forth between the two whenever computations are performed.

Because machine-learning algorithms require so many computations, Biswas continued, shuttling data back and forth accounts for most of the energy consumption. Those computations can be reduced to a single operation called the dot product. The team's goal was to determine whether the dot product could be implemented inside the memory itself, eliminating the costly transfer of data between processor and memory.
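To make the dot product concrete: each node in a neural network multiplies every input by a learned weight and adds up the results. The minimal Python sketch below shows that operation for a single node. The function name `dot_product` and the sample values are hypothetical illustrations, not anything taken from MIT's design.

```python
def dot_product(inputs: list[float], weights: list[float]) -> float:
    """Multiply each input by its matching weight and sum the products."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    return sum(x * w for x, w in zip(inputs, weights))


# A hypothetical node with three inputs and three learned weights.
inputs = [0.5, -1.0, 2.0]
weights = [0.25, 0.75, -0.5]
print(dot_product(inputs, weights))  # → -1.625
```

On a conventional chip, every one of those inputs and weights has to travel from memory to the processor before it can be multiplied, and a real network repeats this for millions of nodes.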

The team's prototype can calculate dot products for up to 16 nodes at a time rather than shuttling data between a processor and a bank of memory for every computation. As you can imagine, this saves a lot of time and drastically reduces energy consumption.
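The rough sketch below illustrates why computing in memory helps. It is a deliberately simplified counting model, not a model of the chip's actual circuitry or energy use. We assume that a conventional design fetches one weight from memory per multiplication, while the in-memory design keeps the weights in place and reads out only one finished sum per node. The binary +1/-1 weights, the 64-input layer size, and the function names `conventional` and `in_memory` are all assumptions made for illustration; only the 16-node limit comes from the article.

```python
import random

NODES_PER_PASS = 16  # the prototype's per-pass limit, as reported in the article


def conventional(inputs, weight_rows):
    """Toy model: every weight is fetched from memory before it is multiplied."""
    transfers = 0
    outputs = []
    for row in weight_rows:
        total = 0
        for x, w in zip(inputs, row):
            transfers += 1  # one weight moved from memory to the processor
            total += x * w
        outputs.append(total)
    return outputs, transfers


def in_memory(inputs, weight_rows):
    """Toy model: weights stay in memory; only each node's sum is read out."""
    if len(weight_rows) > NODES_PER_PASS:
        raise ValueError(f"at most {NODES_PER_PASS} nodes per pass")
    outputs = [sum(x * w for x, w in zip(inputs, row)) for row in weight_rows]
    return outputs, len(outputs)  # one readout per node


# Hypothetical layer: 16 nodes, 64 integer inputs, weights of +1 or -1.
random.seed(0)
inputs = [random.randint(-8, 8) for _ in range(64)]
weights = [[random.choice((-1, 1)) for _ in range(64)] for _ in range(16)]

conv_out, conv_transfers = conventional(inputs, weights)
mem_out, mem_transfers = in_memory(inputs, weights)
print(conv_out == mem_out)            # → True
print(conv_transfers, mem_transfers)  # → 1024 16
```

Both versions produce identical node outputs; in this toy accounting, the difference lies entirely in how much data has to move, which is the cost Biswas identified as dominant.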

The prototype isn't perfect: in testing, its results were generally within two to three percent of those from a conventional network. Still, it is promising. Dario Gil, vice president of artificial intelligence at IBM, said it will certainly open the possibility of employing more complex convolutional neural networks for image and video classification in future IoT devices.

Several other major technology players are also working on hardware to run neural networks on-device. Huawei's Kirin 970 chipset, unveiled in September, includes a dedicated neural processing unit, as does Samsung's Exynos 9 Series 9810 SoC. Apple's latest A11 Bionic chipset also features a dedicated neural engine.

Biswas is presenting his work at the International Solid-State Circuits Conference this week.