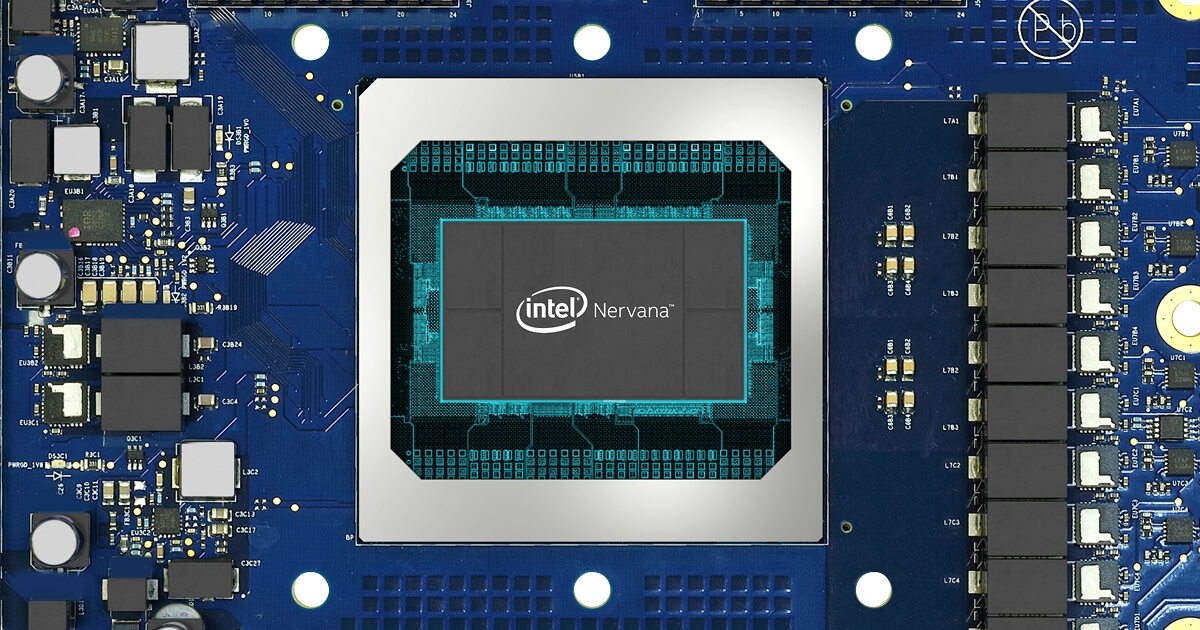

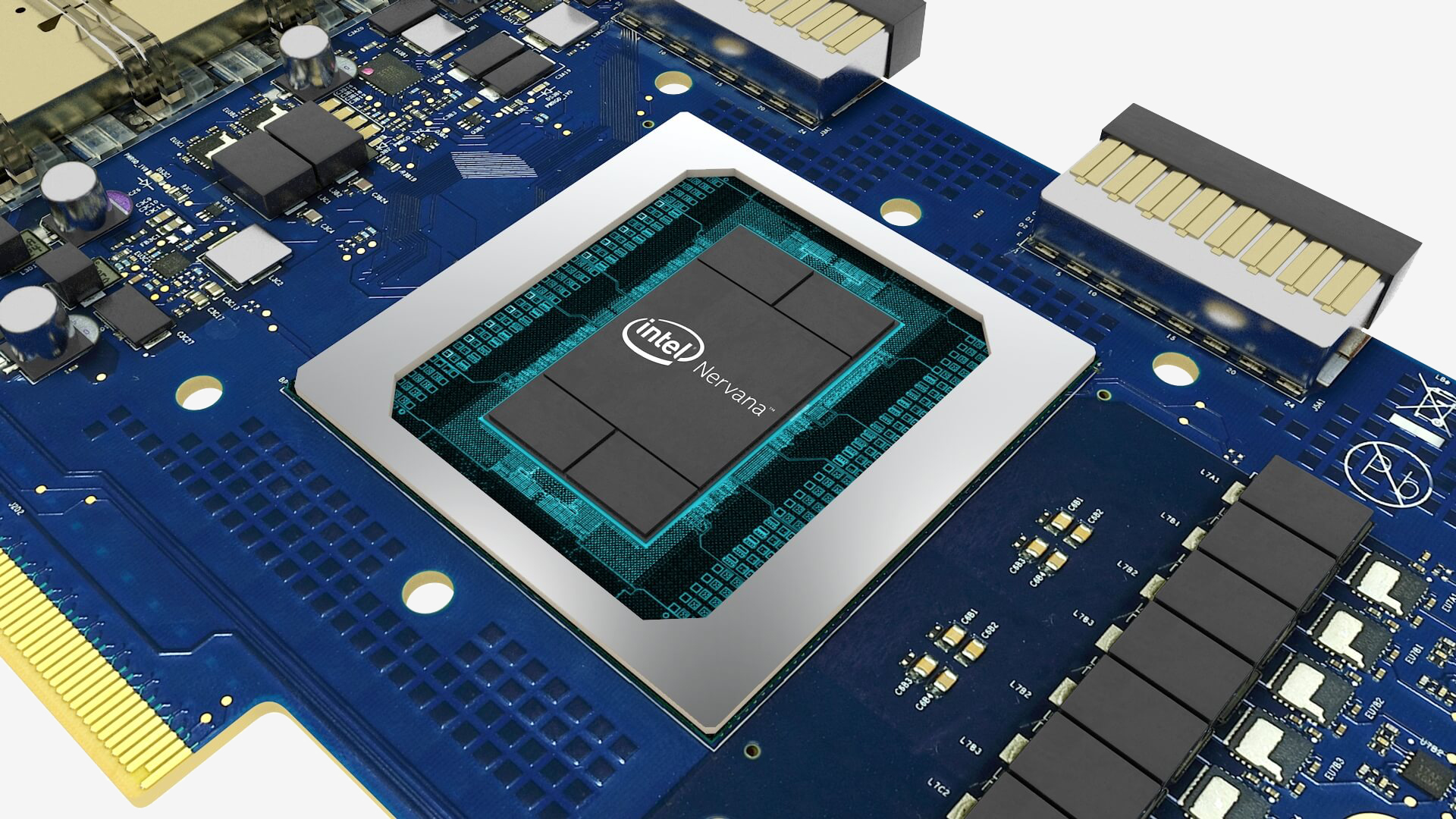

Earlier this year, Intel showed off its research and development efforts to build the Intel Nervana Neural Network Processor. The newly designed chip is intended to far outperform general-purpose processors in machine learning and artificial intelligence applications. Better yet, it is a product that is actually going to make it to market.

Carey Kloss, vice president of hardware for Intel's artificial intelligence products group, has given an update on refinements made to the Nervana architecture. To put those refinements in context, first consider what a neural network processor (NNP) must do. Training a neural network requires massive amounts of memory and arithmetic operations to generate useful output.

Scaling capabilities, power consumption and maximum utilization are the primary considerations for Nervana's spatial architecture. To achieve maximum power savings, data should not be moved within the system unless absolutely necessary. Vector data can be split across memory modules so that it always sits close to where it is needed.
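To make the idea of keeping data near its consumer concrete, here is a minimal Python sketch. It is not Intel's design: the function names (`shard`, `local_dot`, `distributed_dot`) and the choice of a dot product are assumptions made purely for illustration. Each "module" holds one slice of two vectors and computes on its own slice, so only a single partial result per module has to travel.

```python
def shard(vector, num_modules):
    """Split a vector into contiguous slices, one per (hypothetical) memory module."""
    if not vector:
        return []
    size = -(-len(vector) // num_modules)  # ceiling division
    return [vector[i:i + size] for i in range(0, len(vector), size)]


def local_dot(a_shard, b_shard):
    """Work done next to one module: only local data is touched."""
    return sum(x * y for x, y in zip(a_shard, b_shard))


def distributed_dot(a, b, num_modules):
    """Dot product in which only one scalar per module is moved and combined."""
    a_shards = shard(a, num_modules)
    b_shards = shard(b, num_modules)
    partials = [local_dot(x, y) for x, y in zip(a_shards, b_shards)]
    return sum(partials), len(partials)


total, values_moved = distributed_dot(list(range(1, 9)), [1] * 8, num_modules=4)
print(total, values_moved)
```

In this toy model, eight input elements per vector stay where they are and only four partial sums cross module boundaries.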

With the implementation of high bandwidth memory (HBM), bandwidth exceeding 1TB/s is possible between on-die and external memory banks. Impressive as that figure is, memory bandwidth is still a limiting factor for deep learning workloads. Since Intel cannot wait for new memory technologies to be developed, it has come up with some creative workarounds.

Software control of memory use allows on-die memory to load information from external memory once and then shift the data between local memory modules. Each module is approximately 2MB, with a total of around 30MB per Nervana chip. Reducing reads to external memory helps prevent saturation of memory bandwidth and allows the next data set needed for operation to be pre-fetched.
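The load-once, pre-fetch-next pattern can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `ExternalMemory` class, the `run_with_prefetch` function and the use of `sum` as the "compute" step are all hypothetical, and sequential Python cannot actually overlap the fetch with computation the way hardware can. What the sketch does show is the ordering of operations and how reuse from local memory keeps the external read count down.

```python
class ExternalMemory:
    """Stand-in for off-chip memory that counts how often it is read."""

    def __init__(self, tiles):
        self.tiles = tiles
        self.reads = 0

    def read(self, index):
        self.reads += 1
        return list(self.tiles[index])  # copy into "local" memory


def run_with_prefetch(ext, passes_per_tile):
    """Fetch each tile once, reuse it locally, and request the next tile early."""
    results = []
    num_tiles = len(ext.tiles)
    next_tile = ext.read(0) if num_tiles else None  # initial fetch
    for i in range(num_tiles):
        current = next_tile
        # Issue the fetch for the following tile before computing on this one.
        next_tile = ext.read(i + 1) if i + 1 < num_tiles else None
        for _ in range(passes_per_tile):
            results.append(sum(current))  # every pass reuses the local copy
    return results


ext = ExternalMemory([[1, 2], [3, 4], [5, 6], [7, 8]])
out = run_with_prefetch(ext, passes_per_tile=3)
print(ext.reads, len(out))
```

With four tiles and three passes over each, a scheme that re-read external memory on every pass would issue twelve reads; this one issues four.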

An update to the Flexpoint data type allows for performance similar to 32-bit floating point operations using only 16 bits of storage. Because each value takes half as many bits, twice as many values fit through the same memory bandwidth, effectively doubling it. Flexpoint is also modular, so future generations of Nervana will be able to further reduce the number of bits Flexpoint operations require.
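The article does not describe how Flexpoint works internally. The Python sketch below assumes a shared-exponent layout, in which every value in a tensor is stored as a 16-bit signed integer and a single exponent is stored once for the whole tensor. That assumption follows public descriptions of the format but is not taken from this update, and the function names are hypothetical. It is a rough illustration of why 16 bits per value can still cover a useful range, not Intel's implementation.

```python
import math


def encode_shared_exponent(values, mantissa_bits=16):
    """Quantize floats to signed integer mantissas that share one exponent."""
    limit = 2 ** (mantissa_bits - 1) - 1  # 32767 for 16 bits
    max_abs = max((abs(v) for v in values), default=0.0)
    if max_abs == 0.0:
        return [0] * len(values), 0
    # Smallest power-of-two scale at which the largest value still fits.
    exponent = math.ceil(math.log2(max_abs / limit))
    scale = 2.0 ** exponent
    # Clamp to guard against rounding at the very edge of the range.
    mantissas = [max(-limit, min(limit, round(v / scale))) for v in values]
    return mantissas, exponent


def decode_shared_exponent(mantissas, exponent):
    """Recover approximate floats from the mantissas and the shared exponent."""
    scale = 2.0 ** exponent
    return [m * scale for m in mantissas]


weights = [0.5, -1.0, 0.25, 0.001]
mantissas, exponent = encode_shared_exponent(weights)
restored = decode_shared_exponent(mantissas, exponent)
print(mantissas, exponent, restored)
```

Under this scheme the per-value storage is 16 bits plus one small exponent per tensor, compared with 32 bits per value for single-precision floats. The trade-off is that values far smaller than the largest one in the tensor lose precision, since they all share the same scale.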

Communication between the chip and external components has been significantly improved to offer bidirectional, terabit-class performance. A cluster of Nervana chips can work on a single task as if the cluster were a single massive processor due to the high-speed communication between chips.