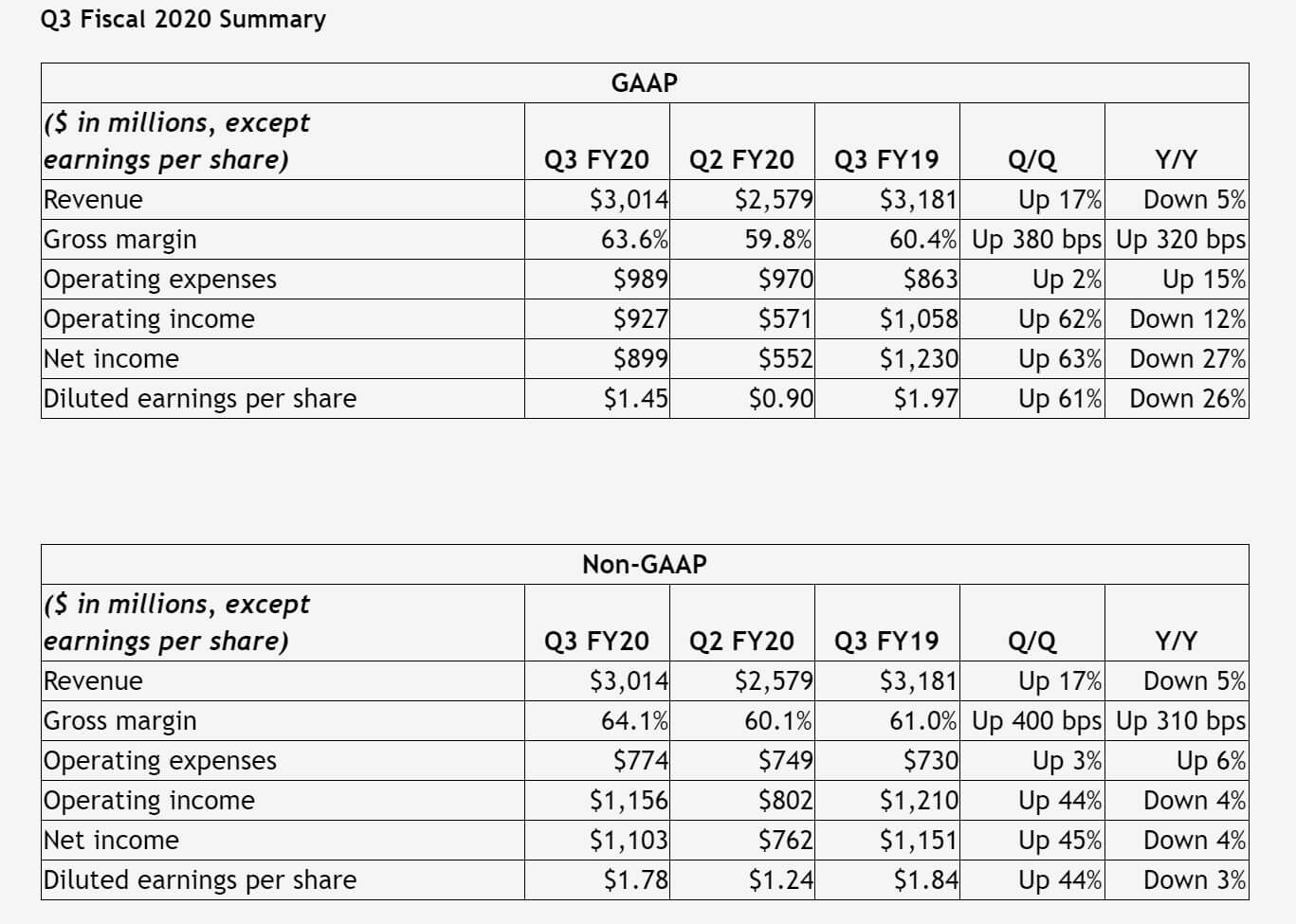

What just happened? Nvidia has revealed its third-quarter financial results, and while the company did beat expectations, revenue fell 5 percent compared to the same quarter one year ago.

In the quarter that ended October 27, Nvidia reported revenue of $3.01 billion. While that’s down from the $3.18 billion a year earlier, it’s a 17 percent jump over the $2.58 billion from the previous quarter. Non-GAAP earnings per diluted share were $1.78, compared with $1.84 a year earlier and $1.24 in the previous quarter.
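For readers who want to check the percentages, here is a minimal sketch of the arithmetic using only the revenue figures quoted above. The helper name `pct_change` is purely illustrative, and the results are rounded, so they may differ slightly from Nvidia's own reported figures.

```python
def pct_change(current: float, baseline: float) -> float:
    """Return the percent change from baseline to current."""
    return (current - baseline) / baseline * 100.0


# Revenue in billions of dollars, as quoted in the article.
q3_revenue = 3.01
year_ago_revenue = 3.18
prior_quarter_revenue = 2.58

yoy = pct_change(q3_revenue, year_ago_revenue)
qoq = pct_change(q3_revenue, prior_quarter_revenue)

print(f"Year over year: {yoy:.1f}%")
print(f"Quarter over quarter: {qoq:.1f}%")
```

Rounded to whole numbers, these work out to the 5 percent annual decline and 17 percent sequential gain cited above.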

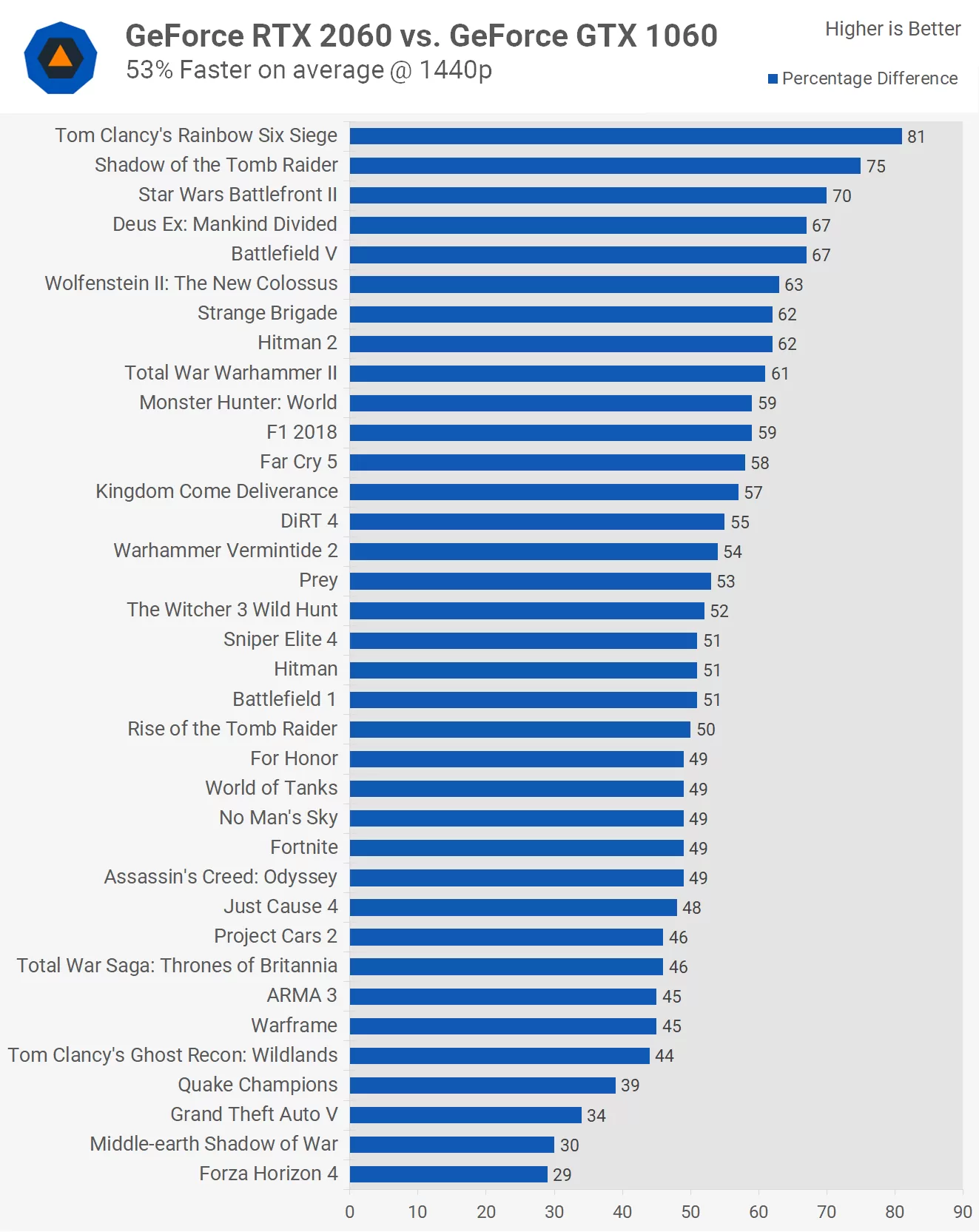

"Our gaming business and demand from hyperscale customers powered Q3’s results," said CEO Jensen Huang. "The realism of computer graphics is taking a giant leap forward with NVIDIA RTX."

Breaking down Nvidia’s segments, GPU business revenue was down 8 percent to $2.56 billion. Gaming revenue was down 6 percent YoY to $1.66 billion but up 26 percent from the previous quarter, an increase spurred by growth in its GeForce desktop and gaming GPUs.

Data center revenue was also down YoY, to $726 million, but the company believes the segment will see strong sequential growth “driven by the rise of conversational AI and inference.” Its automotive segment, meanwhile, brought in $162 million, and the professional visualization segment hit $324 million, up 6 percent.

The figures beat expectations, with analysts looking for earnings of $1.57 per share on revenue of $2.91 billion. For the fourth quarter, Nvidia expects revenue of $2.95 billion, which is lower than Wall Street’s $3.06 billion prediction.